Learn About MEG and Electromagnetic Brain Mapping at the Medical College of Wisconsin

Data Preprocessing

Data filtering is a conceptually simple yet powerful technique for extracting signals within a predefined frequency band of interest. This off-line data pre-processing step is the realm of digital filtering, an important and sophisticated subfield of electrical engineering (Hamming, 1983). Applying a filter to the data presupposes that the information carried by the signals of interest will be mostly preserved, while other frequency components, supposedly of no interest, are attenuated.

Not every digital filter is suitable for the analysis of MEG/EEG traces. The performance of a filter is defined by basic characteristics such as the attenuation of its frequency response outside the passband, stability, computational efficiency and, most importantly, the introduction of phase delays. The latter are a systematic by-product of filtering, and some filters are particularly inappropriate in that respect. Infinite impulse response (IIR) digital filters are usually more computationally efficient than finite impulse response (FIR) alternatives, but have the drawback of introducing non-linear, frequency-dependent phase delays, hence unequal delays in the time domain across frequencies. This is unacceptable for MEG/EEG signal analysis, where timing and phase measurements are crucial. Linear-phase FIR filters delay signals in the time domain equally at all frequencies, which can be conveniently compensated for by applying the filter twice: once forward and once backward on the MEG/EEG time series (Oppenheim, Schafer, & Buck, 1999).

Note however that FIR filters may produce edge effects at the beginning and end of the time series, and that a large number of time samples is necessary when applying filters with low high-pass cutoff frequencies (as the length of the filter’s impulse response increases). Hence it is generally advisable to apply digital high-pass filters on longer episodes of data, such as the original ‘raw’ recordings, before these are chopped into shorter epochs about each trial for further analysis.
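The forward-backward scheme and its edge effects can be illustrated with a minimal NumPy sketch. The Hamming-windowed sinc design, the 501-tap length, the 2 to 30 Hz passband and the synthetic 10 Hz and 100 Hz test signal are all assumptions chosen for illustration; they are not the settings of any particular MEG software package.

```python
import numpy as np

def design_bandpass_fir(low_hz, high_hz, fs, numtaps=501):
    """Hamming-windowed sinc band-pass; symmetric taps give linear phase."""
    n = np.arange(numtaps) - (numtaps - 1) / 2

    def lowpass(cutoff_hz):
        fc = cutoff_hz / fs  # cutoff in cycles per sample
        return 2 * fc * np.sinc(2 * fc * n)

    # Band-pass = difference of two low-pass responses, tapered by the window.
    return (lowpass(high_hz) - lowpass(low_hz)) * np.hamming(numtaps)

def filter_causal(x, h):
    """One forward pass: the output lags the input by (len(h) - 1) / 2 samples."""
    return np.convolve(x, h, mode="full")[: len(x)]

def filter_zero_phase(x, h):
    """Forward pass, then a pass on the time-reversed output: the delays cancel."""
    forward = filter_causal(x, h)
    return filter_causal(forward[::-1], h)[::-1]

# Synthetic trace (assumed): a 10 Hz component of interest plus 100 Hz interference.
fs = 1000.0
t = np.arange(4000) / fs
clean = np.sin(2 * np.pi * 10 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 100 * t)

h = design_bandpass_fir(2.0, 30.0, fs)
delay = (len(h) - 1) // 2              # 250 samples for 501 taps
one_pass = filter_causal(noisy, h)     # delayed copy of the 10 Hz component
two_pass = filter_zero_phase(noisy, h) # aligned with the 10 Hz component
```

In this sketch the first and last `len(h) - 1` samples of `two_pass` are corrupted by filter transients, which is one reason to filter the long raw recording before cutting it into epochs.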

Going into further detail would be beyond the scope of these pages. The investigator should nevertheless be well aware of the potential pitfalls of analysis techniques in general, and of digital filters in particular. Although commercial software tools are well equipped with adequate filter functions, in-house or academic software solutions should first be evaluated with great caution.

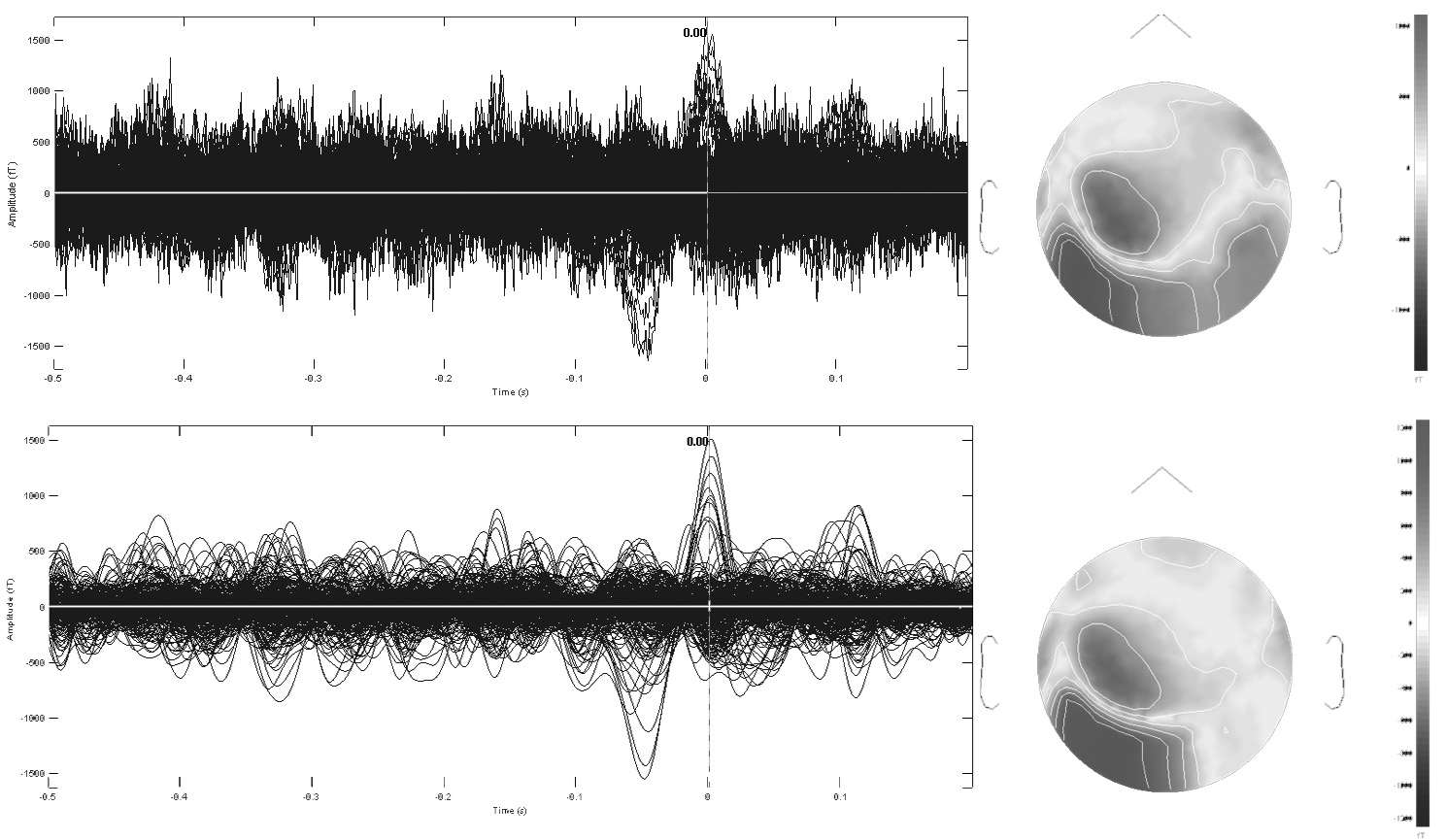

Digital band-pass filtering applied to spontaneous MEG data during an interictal epileptic spike event (total epoch of 700 ms duration, sampled at 1 kHz). The time series of 306 MEG sensors are displayed using a butterfly plot, whereby all waveforms are overlaid within the same axes. The top row displays the original data with digital filters applied during acquisition between 1.5 and 330 Hz. The bottom row is a pre-processed version of the same data, band-pass filtered between 2 and 30 Hz. Note how this version of the data better reveals the epileptic event occurring about time t=0 ms. The corresponding sensor topographies of MEG measures are displayed to the right. The gray scale display represents the intensity of the magnetic field captured at each sensor location and interpolated over a flattened version of the MEG array (nose pointing upwards). Note also how digital band-pass filtering strongly alters the surface topography of the data, by revealing a simpler dipolar pattern over the left temporo-occipital areas of the array.

Despite all the precautions to obtain clean signals from EEG and MEG sensors, electrophysiological traces are likely to be contaminated by a wide variety of artifacts.

These originate from sources other than the brain: primarily the eyes, the heart and muscles (head or limb motion, muscular tension due to postural discomfort or fatigue), but also electromagnetic perturbations from other devices used in the experiment, power line contamination, etc.

The key challenge is that most of these nuisance factors contribute to MEG/EEG recordings with significantly more power than ongoing brain signals (a factor of about 50 for heartbeats, eye-blinks and movements, see Fig.3). Deciding whether experimental trials contaminated by artifacts need to be discarded requires that the artifacts be properly detected in the first place.

The literature on methods for noise detection, attenuation and correction is too vast to be properly covered in these pages. In a nutshell, the chances of detecting and correcting artifacts are higher when they are monitored by a dedicated measurement. Hence electrophysiological monitoring (ECG, EOG, EMG, etc.) is strongly encouraged in most experimental settings. Some MEG solutions use additional magnetic sensors located away from the subject’s head to capture the environmental magnetic fields inside the magnetically shielded room (MSR). Adaptive filtering techniques may then be applied quite effectively (Haykin, 1996).
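As an illustration of adaptive filtering with a reference sensor, here is a minimal sketch of a normalized least-mean-squares (NLMS) noise canceller. The simulated signals, the two-tap leakage path from the reference to the MEG channel, the filter length and the step size `mu` are all assumptions; actual systems may use different adaptive schemes.

```python
import numpy as np

def lms_cancel(primary, reference, n_taps=8, mu=0.1):
    """Normalized LMS: predict the noise in `primary` from `reference`, subtract it."""
    w = np.zeros(n_taps)
    cleaned = np.zeros_like(primary)
    for n in range(n_taps - 1, len(primary)):
        x = reference[n - n_taps + 1 : n + 1][::-1]  # most recent sample first
        e = primary[n] - w @ x                       # what the reference cannot explain
        w += mu * e * x / (x @ x + 1e-12)            # normalized gradient step
        cleaned[n] = e
    return cleaned

# Simulated data (assumed): a 10 Hz brain signal buried under environmental noise
# that is also picked up, alone, by a reference sensor away from the head.
rng = np.random.default_rng(0)
n = 5000
t = np.arange(n) / 1000.0
brain = np.sin(2 * np.pi * 10 * t)
reference = rng.standard_normal(n)
leak = 5.0 * (0.8 * reference + 0.3 * np.roll(reference, 1))
primary = brain + leak

cleaned = lms_cancel(primary, reference)

def rms(v):
    return np.sqrt(np.mean(v ** 2))

half = slice(n // 2, n)  # evaluate after the filter has converged
err_before = rms(primary[half] - brain[half])
err_after = rms(cleaned[half] - brain[half])
```

The scheme relies on the reference sensor capturing the interference but not the brain signal; otherwise part of the signal of interest would be cancelled as well.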

The resulting additional recordings may also be used as artifact templates for visual or automatic inspection of the MEG/EEG data. For steady-state perturbations, which are thought to be independent of the brain processes of interest, empirical statistics obtained from a series of representative events (e.g., eye-blinks, heartbeats) are likely to properly capture the nuisance they systematically generate in the MEG/EEG recordings. Approaches like principal or independent component analysis (PCA and ICA, respectively) have proven to be effective in that respect for both conventional MEG/EEG and simultaneous EEG/fMRI recordings (Nolte & Hämäläinen, 2001, Pérez, Guijarro, & Barcia, 2005, Delorme, Sejnowski, & Makeig, 2007, Koskinen & Vartiainen, 2009).
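The idea of capturing an artifact from representative events and removing it can be sketched with a PCA-based spatial projection. The simulated topographies, amplitudes and the event mask (standing in for blinks flagged on an EOG trace) are assumptions; this is an illustrative sketch, not the procedure of any of the cited studies.

```python
import numpy as np

rng = np.random.default_rng(1)
n_channels, n_times = 20, 2000
t = np.arange(n_times)

def unit(v):
    return v / np.linalg.norm(v)

# Simulated sensor data (assumed): one brain source, one much stronger blink source.
brain_topo = unit(rng.standard_normal(n_channels))
blink_topo = unit(rng.standard_normal(n_channels))
brain = np.sin(2 * np.pi * t / 100.0)
blink = sum(np.exp(-0.5 * ((t - c) / 30.0) ** 2) for c in (300, 900, 1500))
data = (np.outer(brain_topo, brain)
        + 50.0 * np.outer(blink_topo, blink)
        + 0.1 * rng.standard_normal((n_channels, n_times)))

# Assumed: blink samples are flagged from a simultaneously recorded EOG trace.
event_mask = blink > 0.5

# First principal component of the artifact samples = empirical artifact topography.
u, s, _ = np.linalg.svd(data[:, event_mask], full_matrices=False)
artifact_component = u[:, 0]

# Orthogonal projector that removes this spatial component from every time sample.
projector = np.eye(n_channels) - np.outer(artifact_component, artifact_component)
cleaned = projector @ data
```

Note that such a projector also removes whatever part of the brain signal shares the artifact topography, so the same operator should be applied to the forward model when source estimation follows.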

Modality-specific noise attenuation techniques, like signal space separation (SSS) and related methods, have been proposed for MEG (Taulu, Kajola, & Simola, 2004). They basically consist of software spatial filters that attenuate sources of nuisance originating from outside a virtual spherical volume designed to contain the subject’s head within the MEG helmet.

Ultimately, the decision whether episodes contaminated by well-identified artifacts need to be discarded or corrected belongs to the investigator. Some scientists design their paradigms so that the number of trials is large enough that a few may be discarded without jeopardizing the analysis.

An enduring tradition of MEG/EEG signal analysis consists in enhancing brain responses that are evoked by a stimulus or an action, by averaging the data about each event – defined as an epoch – across trials. The underlying assumption is that there exist some consistent brain responses that are time-locked and so-called 'phase-locked' to a specific event (again e.g., the presentation of a stimulus or a motor action).

Hence, it is straightforward to enhance these responses by proceeding to epoch averaging across trials, under the assumption that the rest of the data is inconsistent in time or phase with respect to the event of interest. This simple practice has permitted a vast amount of contributions to the field of event-related potentials (in EEG, ERP) and fields (in MEG, ERF) (Handy, 2004, Niedermeyer & Silva, 2004).
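A short simulation illustrates why averaging works: if the background activity is independent across trials, its standard deviation in the average decreases as the square root of the number of trials. The evoked waveform, noise level and trial count below are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials, n_times = 400, 300
t = np.arange(n_times) / 1000.0  # 300 ms epoch sampled at 1 kHz (assumed)

# Assumed evoked response: a unit-amplitude bump peaking 100 ms after the event.
evoked = np.exp(-0.5 * ((t - 0.1) / 0.02) ** 2)

# Each trial = same time-locked response + independent background noise (std 5).
trials = evoked + 5.0 * rng.standard_normal((n_trials, n_times))

average = trials.mean(axis=0)
residual_std = (average - evoked).std()   # close to 5 / sqrt(400) = 0.25
single_trial_std = (trials[0] - evoked).std()
```

With 400 trials, a response five times smaller than the single-trial noise becomes four times larger than the residual noise in the average.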

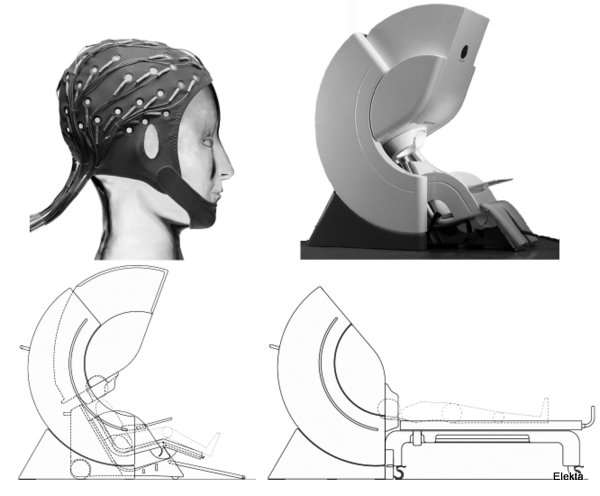

Trial averaging necessitates that epochs be defined about each event of interest (e.g. the stimulus onset, or the subject’s response, etc.). An epoch has a certain duration, usually defined with respect to the event of interest (pre and post-event). Averaging epochs across trials can be conducted for each experimental condition at the individual and the group levels. This latter practice is called ‘grand-averaging’ and has been made possible originally because electrodes are positioned on the subject’s scalp according to montages, which are defined with respect to basic, reproducible geometrical measures taken on the head. The international 10-20 system was developed as a standardized electrode positioning and naming nomenclature to allow direct comparison of studies across the EEG community (Niedermeyer & Silva, 2004). Standardization of sensor placement does not exist in the MEG community, as the sensor arrays are specific to the device being used and subject heads fit differently under the MEG helmet.
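Epoch definition and averaging can be sketched as follows. The function name `extract_epochs`, the baseline correction by the pre-event mean and the toy data are assumptions made for illustration.

```python
import numpy as np

def extract_epochs(data, event_samples, pre, post):
    """Cut (n_channels, n_times) data into epochs of `pre` + `post` samples.

    Each epoch is baseline-corrected with the mean of its pre-event interval.
    Events too close to the edges of the recording are skipped.
    """
    epochs = []
    for s in event_samples:
        if s - pre < 0 or s + post > data.shape[1]:
            continue
        epoch = data[:, s - pre : s + post]
        baseline = epoch[:, :pre].mean(axis=1, keepdims=True)
        epochs.append(epoch - baseline)
    return np.array(epochs)

# Toy recording (assumed): constant offset of 3 and a unit response
# from 10 to 20 samples after each event.
data = np.full((2, 1000), 3.0)
events = [100, 400, 700, 995]
for s in events:
    data[:, s + 10 : s + 20] += 1.0

epochs = extract_epochs(data, events, pre=50, post=100)  # last event is dropped
evoked = epochs.mean(axis=0)                             # average across trials
```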

Therefore, grand or even inter-run averaging is not encouraged in MEG at the sensor level without applying movement compensation techniques, or without at least checking that only limited head displacements occurred between runs. Note however that trial averaging may be performed on the source time series of the MEG or EEG generators. In this latter situation, typical geometrical normalization techniques such as those used in fMRI studies need to be applied across subjects and are now a more consistent part of the MEG/EEG analysis pipeline.

Once proper averaging has been completed, measures can be taken on ERP/ERF components. Components are defined as waveform elements that emerge from the baseline of the recordings. They may be characterized in terms of e.g., relative latency, topography, amplitude and duration with respect to baseline or a specific test condition. Once again, the ERP/ERF literature is immense and cannot be summarized in these lines. Multiple reviews and textbooks are available and describe in great detail the specificity and sensitivity of event-related components.

The limits of the approach

Phase-locked ERP/ERF components capture only the part of task-related brain responses that repeat consistently in latency and phase with respect to an event. One might however question the physiological origins and relevance of such components in the framework of oscillatory cell assemblies, as a possible mechanism ruling most basic electrophysiological processes (Gray, König, Engel, & Singer, 1989, Silva, 1991, David & Friston, 2003, Vogels, Rajan, & Abbott, 2005). This has led to a fair amount of controversy, whereby evoked components would rather be considered as artifacts of event-related, induced phase resetting of ongoing brain rhythms, mostly in the alpha frequency range ([8,12]Hz) (Makeig et al., 2002). Under this assumption, epoch averaging would only provide a secondary and poorly specific window on brain processes, a judgment that is certainly quite severe.

Indeed, event-related amplitude modulations – hence not phase effects – of ongoing alpha rhythms have been reported as major contributors to the slower event-related components captured by ERPs/ERFs (Mazaheri & Jensen, 2008). Some authors associate these modulations of event-related amplitudes with local enhancements/reductions of event-related synchronization/desynchronization (ERS/ERD) within cell assemblies. The underlying assumption is that as the activity of more cells tends to be synchronized, the net ensemble activity will build up to an increase in signal amplitude (Pfurtscheller & Silva, 1999).

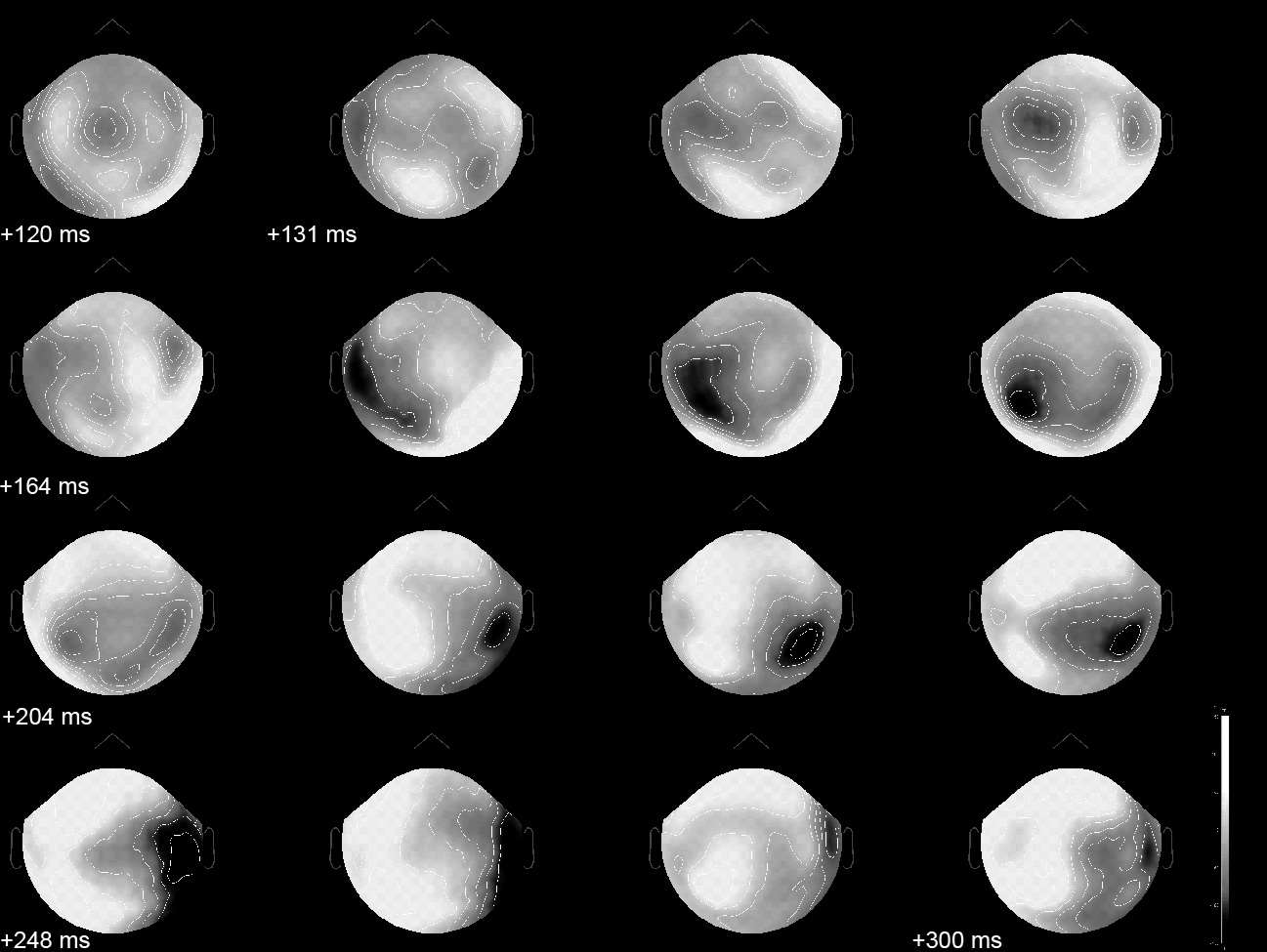

Event-related, evoked MEG surface data in a visual oddball RSVP paradigm. The data was interpolated between sensors and projected on a flattened version of the MEG channel array. Shades of gray represent the inward and outward magnetic fields picked-up outside the head during the [120,300] ms time interval following the presentation of the target face object. The spatial distribution of magnetic fields over the sensor array is usually relatively smooth and reveals some characteristic shape patterns that indicate that brain activity is rapidly changing and propagating during the time window. A much clearer insight can be provided by source imaging.

Massive event-related cell synchronization is not guaranteed to take place with consistent temporal phase with respect to the onset of the event. It is therefore relatively easy to imagine that averaging trials when such phase jitter occurs across event repetitions would lead to decreased effect sensitivity. This assumption can be further elaborated in the theoretical and experimental framework of distributed, synchronized cell assemblies during perception and cognition (Varela et al., 2001, Tallon-Baudry, 2009).

The seminal works by Gray and Singer in cat vision have shown that synchronization of oscillatory responses of spatially distributed cell ensembles is a way to establish relations between features in different parts of the visual field (Gray et al., 1989). These authors showed that these phenomena take place in the gamma range ([40,60]Hz) – i.e., an upper frequency range – of the event-related responses. These results have been confirmed by a large number of subsequent studies in animals and with implanted electrodes in humans, which all demonstrated that these event-related responses could only be captured with an approach to epoch averaging that would be robust to phase jitters across trials (Tallon-Baudry, Bertrand, Delpuech, & Pernier, 1997, Rodriguez et al., 1999).

More evidence of gamma-range brain responses detected with EEG and MEG scalp techniques is being reported as analysis techniques are refined and distributed to a greater community of investigators (Hoogenboom, Schoffelen, Oostenveld, Parkes, & Fries, 2006). It is striking to note that as a greater number of investigations are conducted, the frequency range of gamma responses of interest is constantly expanding and now spans [30,100]Hz and above. As a caveat, this frequency range is also most favorable to contamination from muscle activity, such as phasic contractions or micro-saccades, which may also happen to be task-related (Yuval-Greenberg & Deouell, 2009, Melloni, Schwiedrzik, Wibral, Rodriguez, & Singer, 2009). Therefore great care must be taken to rule out possible confounds in that matter.

An additional interesting feature of gamma responses for neuroimagers is that there is a growing body of evidence showing that they tend to be more specifically coupled to the hemodynamic responses captured in fMRI than other components of the electrophysiological responses (Niessing et al., 2005, Lachaux et al., 2007, Koch, Werner, Steinbrink, Fries, & Obrig, 2009).

Because induced responses are mostly characterized by phase jitters across trials, averaging MEG/EEG traces in the time domain would be detrimental to the extraction of induced signals from the ongoing brain activity (David & Friston, 2003). A typical approach to the detection of induced components once again builds on the hypothesis of systematic emission of event-related oscillatory bursts limited in time duration and frequency range. Time-frequency decomposition (TFD) is a methodology of choice in that respect, as it estimates the instantaneous power of time series in the time-frequency domain. TFD is insensitive to variations of the signal phase when computing the average signal power across trials. TFD is a very active field of signal processing, and one of its core tools is wavelet signal decomposition. Wavelets make it possible to perform the spectral analysis of non-stationary signals, whose spectral properties and contents evolve with time (Mallat, 1998). This is typical of phasic electrophysiological responses, for which Fourier spectral analysis is not adequate because it is based on signal stationarity assumptions (Kay, 1988).

Hence, even though the typical statistic of induced MEG/EEG signal analysis is the trial mean (i.e., the sample average), it is computed on a different measure: the estimate of short-term signal power, decomposed in time and frequency bins. Several academic and commercial software solutions are now available to perform such analysis (and the associated inference statistics) on electrophysiological signals.
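The difference between averaging traces and averaging power can be sketched with a complex Morlet wavelet. The simulated 40 Hz bursts with a random phase on each trial, the 7-cycle wavelet and its normalization are assumptions chosen for illustration.

```python
import numpy as np

def morlet(freq_hz, fs, n_cycles=7):
    """Complex Morlet wavelet: a complex sinusoid under a Gaussian envelope."""
    sigma_t = n_cycles / (2 * np.pi * freq_hz)
    half = int(4 * sigma_t * fs)
    tw = np.arange(-half, half + 1) / fs
    envelope = np.exp(-0.5 * (tw / sigma_t) ** 2)
    return np.exp(2j * np.pi * freq_hz * tw) * envelope / envelope.sum()

def wavelet_power(x, wavelet):
    """Instantaneous power of x around the wavelet's center frequency."""
    return np.abs(np.convolve(x, wavelet, mode="same")) ** 2

# Simulated induced response (assumed): a 40 Hz burst centered at 300 ms,
# with a different, random phase on every trial, plus background noise.
rng = np.random.default_rng(2)
fs, n_trials, n_times = 1000.0, 100, 600
t = np.arange(n_times) / fs
burst_envelope = np.exp(-0.5 * ((t - 0.3) / 0.05) ** 2)
phases = rng.uniform(0, 2 * np.pi, n_trials)
trials = np.array([burst_envelope * np.cos(2 * np.pi * 40 * t + p) for p in phases])
trials += 0.5 * rng.standard_normal(trials.shape)

w = morlet(40.0, fs)
# Average of single-trial power: insensitive to the phase jitter.
total_power = np.mean([wavelet_power(tr, w) for tr in trials], axis=0)
# Power of the trial average: the jittered bursts largely cancel out.
evoked_power = wavelet_power(trials.mean(axis=0), w)
```

In this simulation the burst stands out clearly in `total_power` around 300 ms, whereas it is almost absent from `evoked_power`.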

The analysis of brain connectivity is a rapidly evolving field of Neuroscience, with significant contributions from new neuroimaging techniques and methods (Bandettini, 2009). While structural and functional connectivity has been emphasized with MRI-based techniques (Johansen-Berg & Rushworth, 2009, K. Friston, 2009), the time resolution of MEG/EEG offers a unique perspective on the mechanisms of rapid neural connectivity engaging cell assemblies at multiple temporal and spatial scales.

We may summarize the research taking place in that field by mentioning two approaches that have developed somewhat distinctly in recent years, though we might predict they will ultimately converge with forthcoming research efforts. We shall note that most of the methods summarized below are also applicable to the analysis of MEG/EEG source connectivity and are not restricted to the analysis of sensor data. We further emphasize that connectivity analysis is easily fooled by confounds in the data, such as volume conduction effects – i.e., smearing of scalp MEG/EEG data due to the distance from brain sources to sensors and the conductivity properties of head tissues, as we shall discuss below – which need to be carefully evaluated in the course of the analysis (Nunez et al., 1997, Marzetti, Gratta, & Nolte, 2008).

Synchronized cell assemblies

The first strategy is inherited directly from the compelling intracerebral recording results demonstrating that cell synchronization is a central feature of neural communication (Gray et al., 1989). Signal analysis techniques dedicated to the estimation of signal interdependencies in the broad sense have been widely applied to MEG/EEG sensor traces. Contrary to what is appropriate for the analysis of fMRI’s slow hemodynamics, simple correlation measures in the time domain are thought not to be able to capture the specificity of electrophysiological signals, whose components are defined over a fairly large frequency spectrum. Coherence measures are certainly amongst the most investigated techniques in MEG/EEG, because they are designed to be sensitive to simultaneous variations of power that are specific to each frequency bin of the signal spectrum (Nunez et al., 1997). There is however a competing assumption that neural signals may synchronize their phases, without the necessity of simultaneous, increased power modulation (Varela et al., 2001). Wavelet-based techniques have therefore been developed to detect episodes of phase synchronization between signals (Lachaux, Rodriguez, Martinerie, & Varela, 1999, Rodriguez et al., 1999).
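A wavelet-based phase synchronization index can be sketched as a phase-locking value: the length of the trial-averaged unit vector carrying the phase difference between two signals. The simulated 40 Hz signals, the constant phase lag of the coupled pair and the wavelet parameters are assumptions; this illustrates the principle rather than reproducing the cited implementations.

```python
import numpy as np

def morlet_phase(x, freq_hz, fs, n_cycles=7):
    """Instantaneous phase of x around freq_hz from a complex Morlet wavelet."""
    sigma_t = n_cycles / (2 * np.pi * freq_hz)
    half = int(4 * sigma_t * fs)
    tw = np.arange(-half, half + 1) / fs
    wavelet = np.exp(2j * np.pi * freq_hz * tw) * np.exp(-0.5 * (tw / sigma_t) ** 2)
    return np.angle(np.convolve(x, wavelet, mode="same"))

def phase_locking_value(trials_a, trials_b, freq_hz, fs):
    """PLV(t) = |mean over trials of exp(i * (phase_a - phase_b))|, in [0, 1]."""
    dphi = np.array([morlet_phase(a, freq_hz, fs) - morlet_phase(b, freq_hz, fs)
                     for a, b in zip(trials_a, trials_b)])
    return np.abs(np.mean(np.exp(1j * dphi), axis=0))

# Simulated trials (assumed): a and b share their phase up to a fixed lag;
# c oscillates at the same frequency with an unrelated phase.
rng = np.random.default_rng(3)
fs, n_trials, n_times = 1000.0, 100, 600
t = np.arange(n_times) / fs
p_shared = rng.uniform(0, 2 * np.pi, (n_trials, 1))
p_other = rng.uniform(0, 2 * np.pi, (n_trials, 1))

def noise():
    return 0.5 * rng.standard_normal((n_trials, n_times))

a = np.cos(2 * np.pi * 40 * t + p_shared) + noise()
b = np.cos(2 * np.pi * 40 * t + p_shared + np.pi / 4) + noise()
c = np.cos(2 * np.pi * 40 * t + p_other) + noise()

plv_coupled = phase_locking_value(a, b, 40.0, fs)
plv_uncoupled = phase_locking_value(a, c, 40.0, fs)
```

The index depends only on phase differences, not on signal amplitudes, which is what distinguishes it from coherence.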

Causality

Connectivity analysis has also been recently studied through the concept of causality, whereby some neural regions would influence others in a non-symmetric, directed fashion (Gourévitch, Bouquin-Jeannès, & Faucon, 2006). The possibilities to investigate directed influence between not only pairs, but larger sets of time series (i.e., MEG/EEG sensors or brain regions) are vast and are therefore usually ruled by parametric models. These latter may either be related to the definition of the time series (e.g., through auto-regressive modeling for Granger-causality assessment (Lin et al., 2009)), or to the very underlying structure of the connectivity between neural assemblies (e.g., through structural equation modeling (Astolfi et al., 2005) and dynamic causal modeling (David et al., 2006, Kiebel, Garrido, Moran, & Friston, 2008)).
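The auto-regressive route to Granger causality can be sketched for a pair of time series: the past of a source signal is said to Granger-cause a target if it improves the prediction of the target beyond what the target's own past achieves. The simulated coupling, the model order and the helper names are assumptions; a practical analysis would add model-order selection and statistical testing.

```python
import numpy as np

def lagged(x, order):
    """Columns x[t-1], ..., x[t-order], for t = order, ..., len(x) - 1."""
    return np.column_stack([x[order - k : len(x) - k] for k in range(1, order + 1)])

def granger(source, target, order=2):
    """log(residual variance without source past / with source past)."""
    y = target[order:]
    own = lagged(target, order)
    full = np.hstack([own, lagged(source, order)])
    res_restricted = y - own @ np.linalg.lstsq(own, y, rcond=None)[0]
    res_full = y - full @ np.linalg.lstsq(full, y, rcond=None)[0]
    return np.log(res_restricted.var() / res_full.var())

# Simulated pair (assumed): x drives y with a one-sample lag, not the reverse.
rng = np.random.default_rng(0)
n = 5000
e = rng.standard_normal((2, n))
x = np.zeros(n)
y = np.zeros(n)
for t in range(1, n):
    x[t] = 0.5 * x[t - 1] + e[0, t]
    y[t] = 0.5 * y[t - 1] + 0.4 * x[t - 1] + e[1, t]

gc_x_to_y = granger(x, y)  # clearly positive
gc_y_to_x = granger(y, x)  # close to zero
```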

The second approach to connectivity analysis pertains to the emergence of complex networks studies and associated methodology.

Complexity in brain networks

Complex networks science is a recent branch of applied mathematics that provides quantitative tools to identify and characterize patterns of organization among large inter-connected networks such as the Internet, air transportation systems and mobile telecommunication networks. In neuroscience, this strategy rather concerns the identification of global characteristics of connectivity within the full array of brain signals captured at the sensor or source levels. With this methodology, the concept of the brain connectome has recently emerged, and encompasses new challenges for integrative neurosciences and the technology, methodology and tools involved in neuroimaging, to better embrace spatially-distributed dynamical neural processes at multiple spatial and temporal scales (Sporns, Tononi, & Kötter, 2005, Deco, Jirsa, Robinson, Breakspear, & Friston, 2008). From the operational standpoint, brain ‘connectomics’ is contributing both to theoretical and computational models of the brain as a complex system (Honey, Kötter, Breakspear, & Sporns, 2007, Izhikevich & Edelman, 2008), and experimentally, by suggesting new indices and metrics – such as nodes, hubs, efficiency, modularity, etc. – to characterize and scale the functional organization of the healthy and diseased brain (Bassett & Bullmore, 2009). This type of approach is very promising, and calls for large-scale validation and maturation to connect with the well-explored realm of basic electrophysiological phenomena.
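To make metrics such as hubs and efficiency concrete, the following sketch thresholds a connectivity matrix into a binary graph and computes node degrees and global efficiency (the mean inverse shortest path length between pairs of nodes). The five-node connectivity matrix and the 0.5 threshold are arbitrary assumptions.

```python
import numpy as np

def shortest_paths(adj):
    """All-pairs shortest path lengths of a binary graph (Floyd-Warshall)."""
    n = len(adj)
    d = np.where(adj, 1.0, np.inf)
    np.fill_diagonal(d, 0.0)
    for k in range(n):
        d = np.minimum(d, d[:, [k]] + d[[k], :])
    return d

def global_efficiency(adj):
    """Mean of 1 / (shortest path length) over all pairs of distinct nodes."""
    d = shortest_paths(adj)
    off_diagonal = ~np.eye(len(adj), dtype=bool)
    return np.mean(1.0 / d[off_diagonal])  # unreachable pairs contribute 0

# Assumed connectivity estimates between 5 sensors or regions:
# node 0 is strongly coupled to all others, which are weakly coupled together.
conn = np.full((5, 5), 0.1)
conn[0, 1:] = conn[1:, 0] = 0.8

adj = conn > 0.5                 # keep only the strongest connections
np.fill_diagonal(adj, False)     # no self-connections
degree = adj.sum(axis=0)         # number of connections per node
hub = int(np.argmax(degree))     # most connected node
efficiency = global_efficiency(adj)
```

For this star-shaped graph the hub is node 0 with degree 4, and the global efficiency is (4 x 1 + 6 x 0.5) / 10 = 0.7. The result of such analyses depends on the chosen threshold, which is itself a modeling decision.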

Electromagnetic Neural Source Imaging

From a methodological standpoint, MEG/EEG source modeling is referred to as an ‘inverse problem’, a ubiquitous concept, well-known to physicists and mathematicians in a wide variety of scientific fields: from medical imaging to geophysics and particle physics (Tarantola, 2004). The inverse problem framework helps conceptualize and formalize the fact that, in experimental sciences, models are confronted with observations to draw specific scientific conclusions and/or estimate some parameters that were originally unknown. Parameters are quantities that might be changed without fundamentally violating and thereby invalidating the theoretical model. Predicting observations from a model with a given set of parameters is called solving the forward modeling problem. The reciprocal situation where observations are used to estimate the values of some model parameters is the inverse modeling problem.

In the context of brain functional imaging in general, and MEG/EEG in particular, we are essentially interested in identifying the neural sources of external signals observed outside the head (non invasively). These sources are defined by their locations in the brain and their amplitude variations in time. These are the essential unknown parameters that MEG/EEG source estimation will reveal, which is a typical incarnation of an inverse modeling problem.

Forward modeling in the context of MEG/EEG consists in predicting the electromagnetic fields and potentials generated by any arbitrary source model, that is, for any location, orientation and amplitude parameter values of the neural currents. In general, MEG/EEG forward modeling considers that some parameters are known and fixed: the geometry of the head, conductivity of tissues, sensor locations, etc. This will be discussed in the next section.

As an illustration, take a single current dipole as a model for the global activity of the brain at a specific latency of an MEG averaged evoked response. We might choose to treat the dipole location, orientation and amplitude as the set of free parameters to be inferred from the sensor observations. We need to specify some parameters to solve the forward modeling problem, which consists in predicting how a single current dipole generates magnetic fields on the sensor array in question. We might therefore choose to specify that the head geometry will be approximated as a single sphere, with its center at some given coordinates.

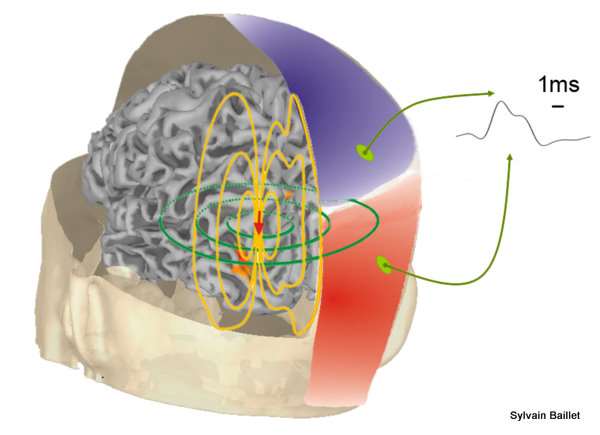

Modeling illustrated: (a) Some unknown brain activity generates variations of magnetic fields and electric potentials at the surface of the scalp. This is illustrated by time series representing measurements at each sensor lead. (b) Modeling of the sources and of the physics of MEG and EEG. As naively represented here, forward modeling consists of a simplification of the complex geometry and electromagnetic properties of head tissues. Source models are presented with colored arrow heads. Their free parameters – e.g., location, orientation and amplitude – are adjusted during the inverse modeling procedure to optimize some quantitative index. This is illustrated here in (c), where the residuals – i.e., the absolute difference between the original data and the measures predicted by a source model – are minimized.
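The single-dipole illustration can be turned into a minimal sketch of forward and inverse modeling. It assumes a spherical head centered at the origin and sensors that measure only the radial component of the magnetic field, in which case the field can be computed from the primary current dipole alone. The sensor layout, candidate grid, dipole parameters and noise level are all hypothetical.

```python
import numpy as np

MU0_OVER_4PI = 1e-7  # T*m/A

def radial_gain(dipole_pos, sensor_pos):
    """Forward model: (n_sensors, 3) gain from dipole moment to radial field."""
    normals = sensor_pos / np.linalg.norm(sensor_pos, axis=1, keepdims=True)
    d = sensor_pos - dipole_pos
    dist3 = np.linalg.norm(d, axis=1, keepdims=True) ** 3
    # Radial field = mu0/4pi * (q x d) . n / |d|^3, and (q x d) . n = q . (d x n).
    return MU0_OVER_4PI * np.cross(d, normals) / dist3

def fit_dipole(data, sensor_pos, candidates):
    """Inverse model: scan candidate locations, solve the moment by least squares."""
    best = None
    for pos in candidates:
        gain = radial_gain(pos, sensor_pos)
        # Radial dipoles are silent in this model, so the gain has rank 2;
        # rcond discards that direction instead of amplifying noise along it.
        moment = np.linalg.lstsq(gain, data, rcond=1e-6)[0]
        residual = np.linalg.norm(data - gain @ moment)
        if best is None or residual < best[0]:
            best = (residual, pos, moment)
    return best

# Hypothetical array: 64 radial magnetometers on the upper half of a 12 cm sphere.
rng = np.random.default_rng(3)
directions = rng.standard_normal((64, 3))
directions[:, 2] = np.abs(directions[:, 2])
sensor_pos = 0.12 * directions / np.linalg.norm(directions, axis=1, keepdims=True)

# Candidate dipole locations on a coarse grid inside the head (meters).
xy = (-0.04, -0.02, 0.0, 0.02, 0.04)
grid = np.array([(x, y, z) for x in xy for y in xy for z in (0.02, 0.04, 0.06, 0.08)])

# Simulated observations from a tangential dipole, with 1% sensor noise.
true_pos = np.array([0.02, -0.02, 0.06])
true_moment = np.array([1e-8, 1e-8, 0.0])  # A*m, orthogonal to true_pos
data = radial_gain(true_pos, sensor_pos) @ true_moment
data += 0.01 * data.std() * rng.standard_normal(data.shape)

residual, est_pos, est_moment = fit_dipole(data, sensor_pos, grid)
```

The location is a non-linear parameter handled here by exhaustive search, while the moment is a linear parameter obtained in closed form for each candidate location; the retained model is the one that minimizes the residuals.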

A fundamental principle is that, whereas the forward problem has a unique solution in classical physics (as dictated by the causality principle), the inverse problem might accept multiple solutions, which are models that equivalently predict the observations.

In MEG and EEG, the situation is critical: it was demonstrated theoretically by von Helmholtz back in the 19th century that the general inverse problem that consists in finding the sources of electromagnetic fields outside a volume conductor has an infinite number of solutions. This issue of non-uniqueness is not specific to MEG/EEG: geophysicists for instance are also confronted with non-uniqueness when trying to determine the distribution of mass inside a planet by measuring its external gravity field. Hence theoretically, an infinite number of source models equivalently fits any MEG and EEG observations, which would make them poor techniques for scientific investigations. Fortunately, this question has been addressed with the mathematics of ill-posedness and inverse modeling, which formalize the necessity of bringing additional contextual information to complement a basic theoretical model.
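Non-uniqueness is easy to reproduce numerically for a linear model with more sources than sensors: any source pattern lying in the null space of the gain matrix is invisible to the sensors. In this sketch a random matrix stands in for an actual MEG/EEG gain matrix, which is an assumption made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(4)
n_sensors, n_sources = 10, 50

# Stand-in gain matrix (assumed random): data = gain @ source_amplitudes.
gain = rng.standard_normal((n_sensors, n_sources))

# Full SVD: the last (n_sources - n_sensors) right singular vectors span the
# null space of the gain matrix, i.e. source patterns that produce no signal.
_, _, vt = np.linalg.svd(gain)
silent = vt[n_sensors:]

sources_a = rng.standard_normal(n_sources)
silent_mix = 3.0 * rng.standard_normal(n_sources - n_sensors)
sources_b = sources_a + silent.T @ silent_mix  # a very different source model

data_a = gain @ sources_a
data_b = gain @ sources_b                      # identical observations
```

Both source configurations explain the data equally well, so the data alone cannot decide between them.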

Hence the inverse problem is a true modeling problem. This has both philosophical and technical impacts on approaching the general theory and the practice of inverse problems (Tarantola, 2004). For instance, it will be important to obtain measures of uncertainty on the estimated values of the model parameters. Indeed, we want to avoid situations where a large set of values for some of the parameters produce models that equivalently account for the experimental observations. If such a situation arises, it is important to be able to question the quality of the experimental data and maybe, falsify the theoretical model.

The non-uniqueness of the solution is a situation where an inverse problem is said to be ill-posed. In the reciprocal situation where there is no value for the system’s parameters to account for the observations, the data are said to be inconsistent (with the model). Another critical situation of ill-posedness is when the model parameters do not depend continuously on the data. This means that even tiny changes in the observations (e.g., by adding a small amount of noise) trigger major variations in the estimated values of the model parameters. This is critical in any experimental situation, and in MEG/EEG in particular, where estimated brain source amplitudes are expected not to ‘jump’ dramatically from millisecond to millisecond.

The epistemology and early mathematics of ill-posedness were pioneered by Jacques Hadamard (Hadamard, 1902), who somewhat radically stated that problems that are not uniquely solvable are of no interest whatsoever. This statement is obviously unfair to important questions in science such as gravimetry, the backwards heat equation and surely MEG/EEG source modeling.

The modern view on the mathematical treatment of ill-posed problems was initiated in the 1960’s by Andrei N. Tikhonov with the introduction of the concept of regularization, which spectacularly formalized the solution of ill-posed problems (Tikhonov & Arsenin, 1977). Tikhonov suggested that some mathematical manipulations on the expression of ill-posed problems could make them well-posed, in the sense that a solution would exist and possibly be unique. More recently, this approach found a more general and intuitive framework using the theory of probability, which naturally refers to the uncertainty and contextual a priori information inherent to experimental sciences (see e.g., Tarantola, 2004).
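The stabilizing effect of regularization can be sketched on a small, artificially ill-conditioned linear problem. The synthetic gain matrix (singular values spanning eight orders of magnitude), the noise level and the regularization parameter `lam` are assumptions; the regularized estimate uses the standard Tikhonov form G^T (G G^T + lam I)^(-1) b.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 20

# Synthetic ill-conditioned gain matrix (assumed): singular values from 1 to 1e-8.
u, _ = np.linalg.qr(rng.standard_normal((n, n)))
v, _ = np.linalg.qr(rng.standard_normal((n, n)))
singular_values = 10.0 ** -np.linspace(0, 8, n)
gain = u @ np.diag(singular_values) @ v.T

sources = rng.standard_normal(n)
data = gain @ sources

def naive_inverse(b):
    """Direct inversion: divides noise by the tiniest singular values."""
    return np.linalg.solve(gain, b)

def tikhonov_inverse(b, lam=1e-6):
    """Tikhonov-regularized estimate: G^T (G G^T + lam * I)^-1 b."""
    return gain.T @ np.linalg.solve(gain @ gain.T + lam * np.eye(n), b)

# Two nearly identical observations, differing only by tiny noise.
noise_1 = 1e-4 * rng.standard_normal(n)
noise_2 = 1e-4 * rng.standard_normal(n)

jump_naive = np.linalg.norm(
    naive_inverse(data + noise_1) - naive_inverse(data + noise_2))
jump_tikhonov = np.linalg.norm(
    tikhonov_inverse(data + noise_1) - tikhonov_inverse(data + noise_2))
```

Here the naive estimates differ by orders of magnitude between the two observations, while the regularized estimates barely move. The price is a bias: components of the sources associated with very small singular values are damped rather than recovered.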

As of 2010, a search of the U.S. National Library of Medicine publication database with the query ‘(MEG OR EEG) AND source’ returned more than 2000 journal articles. This abundant literature may be considered ironically as only a small sample of the infinite number of solutions to the problem, but it is rather a reflection of the many different ways MEG/EEG source modeling can be addressed by considering additional information of various nature.

Such a large amount of reports on a single, technical issue has certainly been detrimental to the visibility and credibility of MEG/EEG as a brain mapping technique within the larger functional brain mapping audience, where the fMRI inverse problem is reduced to the well-posed estimation of the BOLD signal (though it is subject to major detection issues).

Today, it seems that a reasonable degree of technical maturity has been reached by electromagnetic brain imaging using MEG and/or EEG. All methods reduce to only a handful of classes of approaches, which are now well-identified. Methodological research in MEG/EEG source modeling is now moving from the development of inverse estimation techniques, to statistical appraisal and the identification of functional connectivity. In these respects, it is now joining the concerns shared by other functional brain imaging communities (Salmelin & Baillet, 2009).

MEG/EEG forward modeling requires two basic models that are bound to work together in a complementary manner:

- A physical model of neural sources, and

- A model that predicts how these sources generate electromagnetic fields outside the head.

The canonical source model of the net primary intracellular currents within a neural assembly is the electric current dipole. The adequacy of a simple, equivalent current dipole (ECD) model as a building block of cortical current distributions was originally motivated by the shape of the scalp topography of MEG/EEG evoked activity observed. This latter consists essentially of (multiple) so-called ‘dipolar distributions’ of inward/outward magnetic fields and positive/negative electrical potentials. From a historical standpoint, dipole modeling applied to EEG and MEG surface data was a spin-off from the considerable research on quantitative electrocardiography, where dipolar field patterns are also omnipresent, and where the concept of ECD was contributed as early as in the 1960s (Geselowitz, 1964).

However, although cardiac electrophysiology is well captured by a simple ECD model because there is not much questioning about source localization, the temporal dynamics and spatial complexity of brain activity may be more challenging. Alternatives to the ECD model exist in terms of the compact, parametric representation of distributed source currents. They consist either of higher-order source models called multipoles (Jerbi, Mosher, Baillet, & Leahy, 2002, Jerbi et al., 2004) – also derived from cardiographic research (Karp, Katila, Saarinen, Siltanen, & Varpula, 1980) – or densely-distributed source models (Wang, Williamson, & Kaufman, 1992). In the latter case, a large number of ECDs are distributed in the entire brain volume or on the cortical surface, thereby forming a dense grid of elementary sites of activity, whose intensity distribution is determined from the data.

To understand how these elementary source models generate signals that are measurable using external sensors, further modeling is required for the geometrical and electromagnetic properties of head tissues, and the properties of the sensor array.

The details of the sensor geometry and pick-up technology are dependent on the manufacturer of the array. We may however summarize some fundamental principles in the following lines.

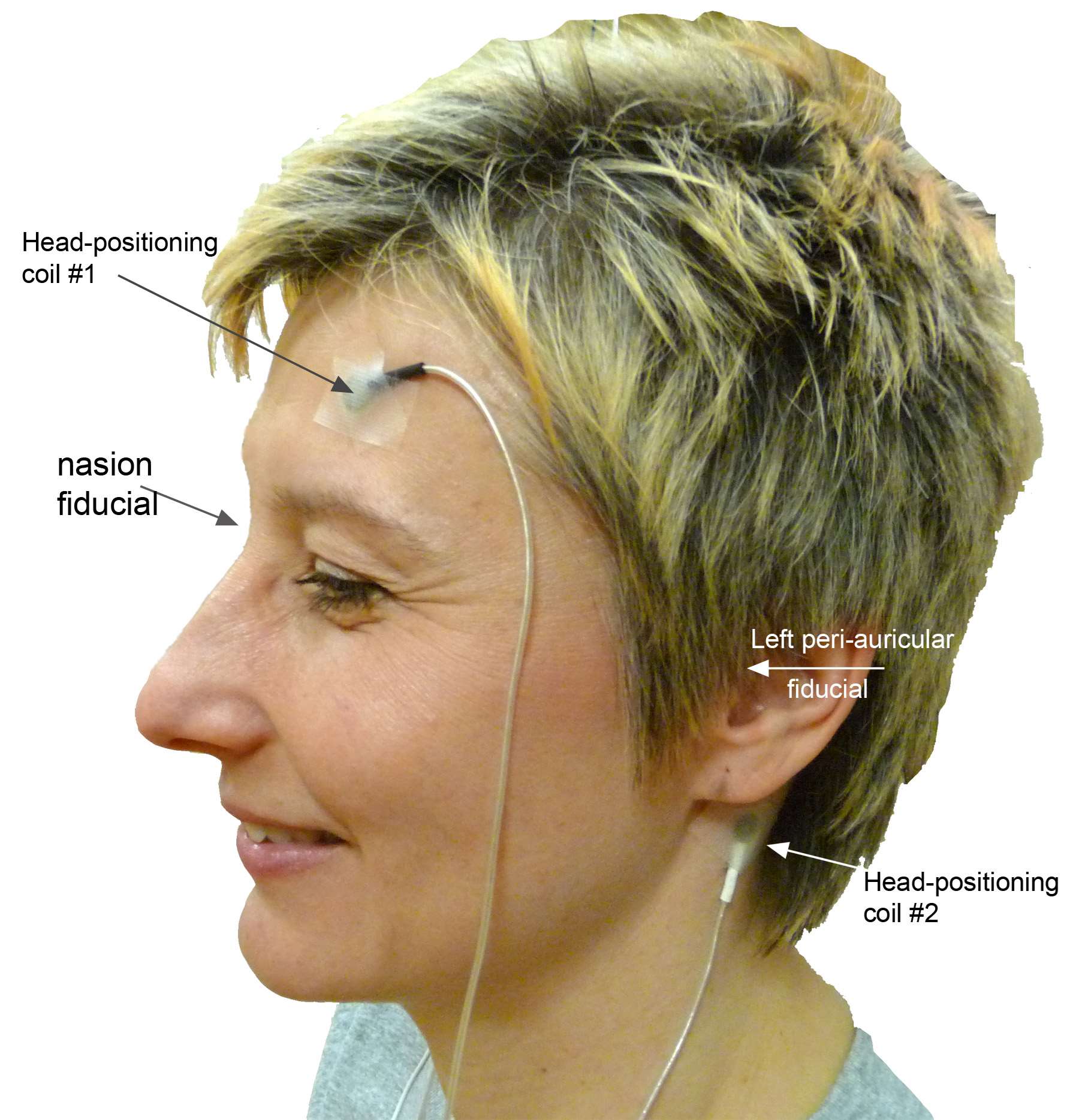

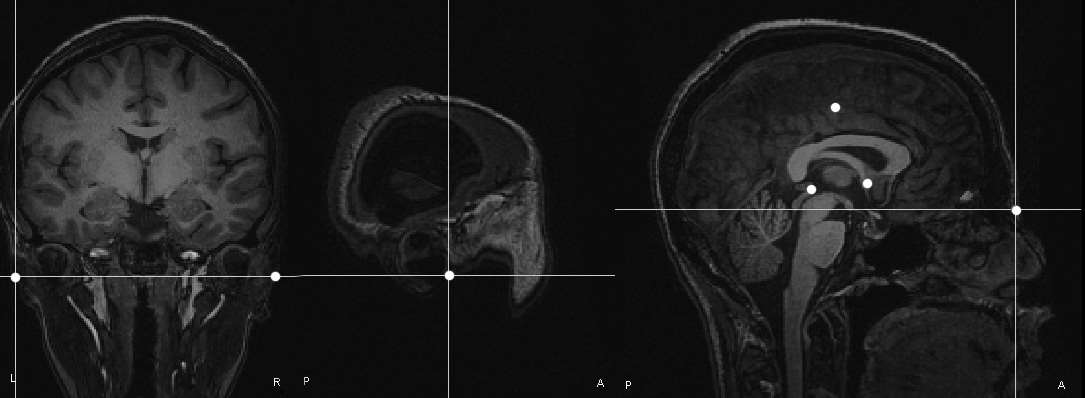

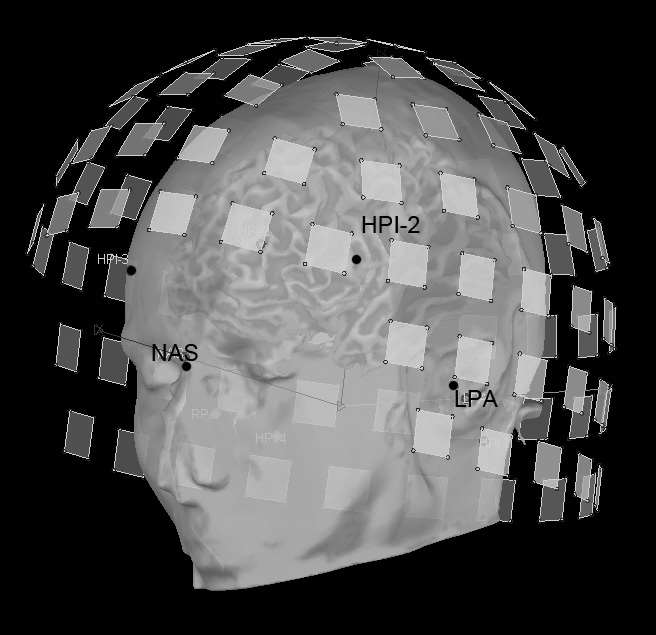

We have already reviewed how the sensor locations can be measured with state-of-the-art MEG and EEG equipment. If this information is missing, sensor locations may be roughly approximated from montage templates, but this will be detrimental to the accuracy of the source estimates (Schwartz, Poiseau, Lemoine, & Barillot, 1996). This is critical with MEG, as the subject is relatively free to position his/her head within the sensor array. Typical 10/20 EEG montages offer fewer degrees of freedom in that respect. Careful consideration of this geometrical registration issue using the solutions discussed above (HPI, head digitization and anatomical fiducials) should provide satisfactory performance in terms of accuracy and robustness.

In EEG, the geometry of electrodes is considered point-like. Advanced electrode modeling should include the true shape of the sensor (that is, a ‘flat’ cylinder), but it is generally acknowledged that the spatial resolution of EEG measures is coarse enough to neglect this factor. One important piece of information however is the location of the reference electrode – e.g., nasion, central, linked mastoids, etc. – as it defines the physics of a given set of EEG measures. If this information is missing, the EEG data can be re-referenced with respect to the instantaneous arithmetic average potential (Niedermeyer & Silva, 2004).
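Re-referencing to the instantaneous average can be sketched in a few lines. The example below is a minimal illustration using NumPy; the array shapes, the simulated data and the function name `average_reference` are assumptions made for the example and do not come from any specific analysis package.

```python
import numpy as np


def average_reference(eeg):
    """Re-reference EEG (channels x samples) to the instantaneous channel average."""
    eeg = np.asarray(eeg, dtype=float)
    # Subtract, at every time sample, the mean potential across all channels.
    return eeg - eeg.mean(axis=0, keepdims=True)


rng = np.random.default_rng(0)
potentials = rng.standard_normal((32, 1000))       # hypothetical 32-channel potentials
reference_signal = rng.standard_normal((1, 1000))  # unknown reference, common to all channels
recorded = potentials - reference_signal           # what the amplifier would store

rereferenced = average_reference(recorded)
```

Because the unknown reference signal is common to all channels, it cancels out in the subtraction: `rereferenced` is the same whichever electrode served as the original reference.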

In MEG, the sensing coils may also be considered point-like as a first approximation, though some analysis software packages include the exact sensor geometry in modeling. The total magnetic flux captured by an MEG sensor can be modeled more accurately by integrating the field over the surface area of its coil. Gradiometer arrangements are readily modeled by applying the arithmetic operation they mimic, combining the fields modeled at each of their magnetometers.

Recent MEG systems include sophisticated online noise-attenuation techniques such as higher-order gradient corrections and signal space projections. They contribute significantly to the basic model of data formation and therefore need to be taken into account (Nolte & Curio, 1999).

Predicting the electromagnetic fields produced by an elementary source model at a given sensor array requires another modeling step, which concerns a large part of the MEG/EEG literature. Indeed, MEG/EEG ‘head modeling’ studies the influence of the head geometry and electromagnetic properties of head tissues on the magnetic fields and electrical potentials measured outside the head.

Given a model of neural currents, the physics of MEG/EEG are ruled by the theory of electrodynamics (Feynman, 1964), which reduces in MEG to Maxwell’s equations, and to Ohm’s law in EEG, under quasistatic assumptions. These assumptions consider that the propagation delay of the electromagnetic waves from brain sources to the MEG/EEG sensors is negligible. The reason is the relative proximity of MEG/EEG sensors to the brain with respect to the expected frequency range of neural sources (up to 1 kHz) (Hämäläinen et al., 1993). This is a very important, simplifying assumption, which has immediate consequences on the computational aspects of MEG/EEG head modeling.

Indeed, the equations of electro- and magnetostatics determine that there exist analytical, closed-form solutions to MEG/EEG head modeling when the head geometry is considered as spherical. Hence, the simplest, and consequently by far the most popular model of head geometry in MEG/EEG consists of concentric spherical layers, with one sphere per major category of head tissue (scalp, skull, cerebrospinal fluid and brain).

The spherical head geometry has further attractive properties for MEG in particular. Quite remarkably indeed, spherical MEG head models are insensitive to the number of shells and their respective conductivity: a source within a single homogeneous sphere generates the same MEG fields as when located inside a multilayered set of concentric spheres with different conductivities. The reason for this is that conductivity only influences the distribution of secondary, volume currents that circulate within the head volume and which are impressed by the original primary neural currents. The analytic formulation of Maxwell’s equations in the spherical geometry shows that these secondary currents do not generate any magnetic field outside the volume conductor (Sarvas, 1987). Therefore in MEG, only the location of the center of the spherical head geometry matters. The respective conductivity and radius of the spherical layers have no influence on the measured MEG fields. This is not the case in EEG, where the location, radii and respective conductivity of each spherical shell all influence the surface electrical potentials.

This relative sensitivity to tissue conductivity values is a general, important difference between EEG and MEG.

A spherical head model can be optimally adjusted to the head geometry, or restricted to regions of interest e.g., parieto-occipital regions for visual studies. Geometrical registration to MRI anatomical data improves the adjustment of the best-fitting sphere geometry to an individual head.

Another remarkable consequence of the spherical symmetry is that radially oriented brain currents produce no magnetic field outside a spherically symmetric volume conductor. For this reason, MEG signals from currents generated within the gyral crest or sulcal depth are attenuated, with respect to those generated by currents flowing perpendicularly to the sulcal walls. This is another important contrast between MEG and EEG’s respective sensitivity to source orientation (Hillebrand & Barnes, 2002).
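The two properties above (no dependence on shell radii or conductivities, and silent radial sources) can be checked numerically. The sketch below implements the closed-form expression for the field outside a spherically symmetric conductor as it is commonly attributed to Sarvas (1987); the sensor and source coordinates, the dipole amplitudes and the function name `sarvas_field` are illustrative assumptions.

```python
import numpy as np

MU0 = 4e-7 * np.pi  # vacuum permeability (T.m/A)


def sarvas_field(r, r0, q):
    """Field (tesla) at sensor r outside a spherically symmetric conductor
    centered at the origin, for a current dipole q (A.m) located at r0 (m).
    Note that no conductivity or shell radius enters the expression."""
    r, r0, q = (np.asarray(v, dtype=float) for v in (r, r0, q))
    a_vec = r - r0
    a = np.linalg.norm(a_vec)
    rn = np.linalg.norm(r)
    F = a * (rn * a + rn**2 - r0 @ r)
    grad_F = ((a**2 / rn + a_vec @ r / a + 2 * a + 2 * rn) * r
              - (a + 2 * rn + a_vec @ r / a) * r0)
    q_x_r0 = np.cross(q, r0)
    return MU0 / (4 * np.pi * F**2) * (F * q_x_r0 - (q_x_r0 @ r) * grad_F)


sensor = np.array([0.03, 0.02, 0.11])              # hypothetical sensor position (m)
source = np.array([0.0, 0.0, 0.07])                # hypothetical source position (m)
radial = 10e-9 * source / np.linalg.norm(source)   # 10 nA.m dipole, radial orientation
tangential = np.array([10e-9, 0.0, 0.0])           # 10 nA.m dipole, tangential orientation

b_radial = sarvas_field(sensor, source, radial)
b_tangential = sarvas_field(sensor, source, tangential)
```

In this expression the dipole moment only enters through the cross product of `q` with `r0`, which vanishes for a radial dipole; `b_radial` is therefore zero whereas `b_tangential` is not.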

Finally, the amplitude of magnetic fields decreases faster than that of electrical potentials with the distance from the generators to the sensors. Hence it has been argued that MEG is less sensitive to mesial and subcortical brain structures than EEG. Experimental and modeling efforts have shown however that MEG can detect neural activity from deeper brain regions (Tesche, 1996, Attal et al., 2009).

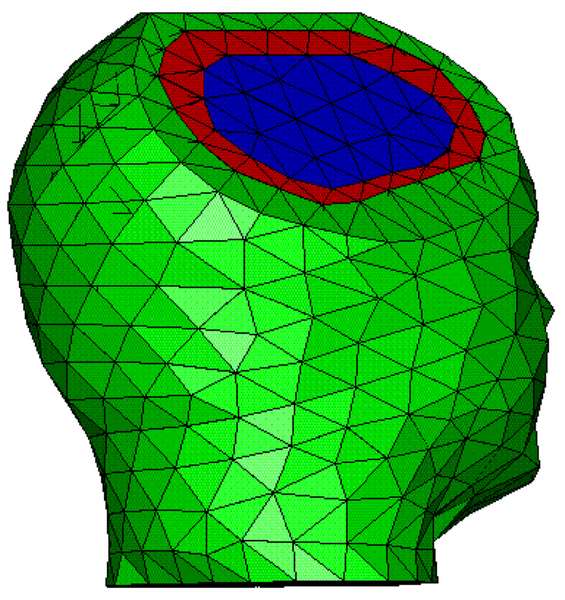

Though spherical head models are convenient, they are poor approximations of the human head shape, which has some influence on the accuracy of MEG/EEG source estimation (Fuchs, Drenckhahn, Wischmann, & Wagner, 1998). More realistic head geometries have been investigated and all require solving Maxwell’s equations using numerical methods. Boundary Element (BEM) and Finite Element (FEM) methods are generic numerical approaches to the resolution of continuous equations over discrete space. In MEG/EEG, geometric tessellations of the different envelopes forming the head tissues need to be extracted from the individual MRI volume data to yield a realistic approximation of their geometry.

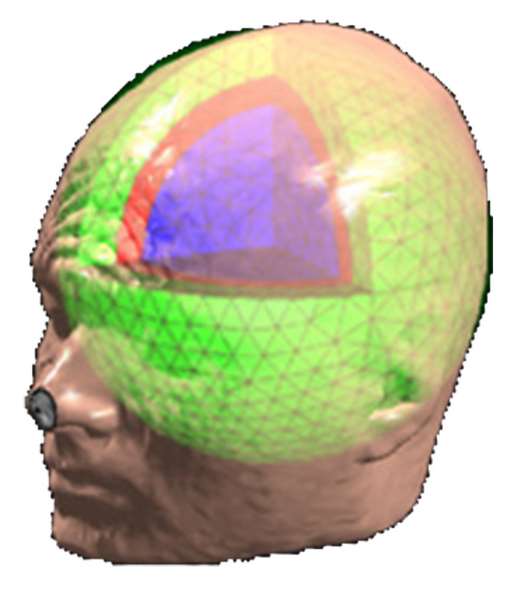

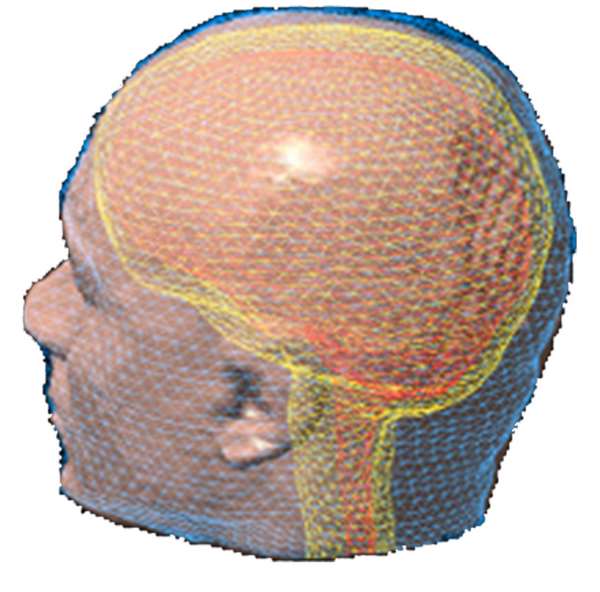

Three approaches to MEG/EEG head modeling: (a) Spherical approximation of the geometry of head tissues, with analytical solution to Maxwell’s and Ohm’s equations; (b) Tessellated surface envelopes of head tissues obtained from the segmentation of MRI data; (c) An alternative to (b) using volume meshes – here built from tetrahedra. In both (b) and (c) Maxwell’s and Ohm’s equations need to be solved using numerical methods: BEM and FEM, respectively.

In BEM, the conductivity of tissues is supposed to be homogeneous and isotropic within each envelope. Therefore, each tissue envelope is delimited using surface boundaries defined over a triangulation of each of the segmented envelopes obtained from MRI.

FEM assumes that tissue conductivity may be anisotropic (such as the skull bone and the white matter), therefore the primary geometric element needs to be an elementary volume, such as a tetrahedron (Marin, Guerin, Baillet, Garnero, & Meunier, 1998).

The main obstacle to a routine usage of BEM, and even more so of FEM, is the surface or volume tessellation phase. Because the head geometry is intricate and not always well-defined from conventional MRI due to signal drop-outs and artifacts, automatic segmentation tools sometimes fail to identify some important tissue structures. The skull bone, for instance, is invisible on conventional T1-weighted MRI. Some image processing techniques can nevertheless estimate the shape of the skull envelope from high-quality T1-weighted MRI data (Dogdas, Shattuck, & Leahy, 2005). The skull bone remains, however, a highly anisotropic structure, which is difficult to model from MRI data. Recent progress using MRI diffusion-tensor imaging (DTI) helps reveal the orientation of major white matter fiber bundles, which is also a major source of conductivity anisotropy (Haueisen et al., 2002).

Computation times for BEM and FEM remain extremely long (several hours on a conventional workstation), and are detrimental to rapid access to source localization following data acquisition. Both algorithmic (Huang, Mosher, & Leahy, 1999, Kybic, Clerc, Faugeras, Keriven, & Papadopoulo, 2005) and pragmatic (Ermer, Mosher, Baillet, & Leahy, 2001, Darvas, Ermer, Mosher, & Leahy, 2006) solutions to this problem have however been proposed to make realistic head models more operational. They are available in some academic software packages.

Finally, let us close this section with an important caveat: realistic head modeling is bound to the correct estimation of tissue conductivity values. Though solutions for impedance tomography using MRI (Tuch, Wedeen, Dale, George, & Belliveau, 2001) and EEG (Goncalves et al., 2003) have been suggested, they remain to be matured before entering the daily practice of MEG/EEG. So far, conductivity values from ex-vivo studies are conventionally integrated in most spherical and realistic head models (Geddes & Baker, 1967).

Throughout these pages, we have stumbled into many pitfalls imposed by the ill-posed nature of the MEG/EEG source estimation problem. We have tried to give a pragmatic point of view on these difficulties.

It is indeed quite striking that despite all these shortcomings, MEG/EEG source analysis may reveal exquisite relative spatial resolution when localization approaches are used appropriately, and – though being of relatively poor absolute spatial resolution – imaging models help researchers tell a story on the cascade of brain events that have been occurring in controlled experimental conditions. From one millisecond to the next, imaging models are able to reveal tiny alterations in the topography of brain activations at the scale of a few millimeters.

An increasing number of groups from other neuroimaging modalities have come to realize that beyond mere cartography, temporal and oscillatory brain responses are essential keys to the understanding and interpretation of the basic mechanisms ruling information processing amongst neural assemblies. The growing number of EEG systems installed in MR magnets and the steady increase in MEG installations demonstrate an active and dynamic scientific community, with exciting perspectives for the future of multidisciplinary brain research.

MEG/EEG Source Modeling for Localization and Imaging of Brain Activity

The localization approach to MEG/EEG source estimation considers that brain activity at any time instant is generated by a relatively small number (a handful, at most) of brain regions. Each source is therefore represented by an elementary model, such as an ECD, that captures local distributions of neural currents. Ultimately, each elementary source is back projected or constrained to the subject’s brain volume or an MRI anatomical template, for further interpretation. In a nutshell, localization models are essentially compact, in terms of number of generators involved and their surface extension (from point-like to small cortical surface patches).

The alternative imaging approaches to MEG/EEG source modeling were originally inspired by the abundant research in image restoration and reconstruction in other domains (early digital imaging, geophysics, and other biomedical imaging techniques). The resulting image source models do not yield small sets of local elementary models but rather the distribution of ‘all’ neural currents. This results in stacks of images where brain currents are estimated wherever elementary current sources had been previously positioned. This is typically achieved using a dense grid of current dipoles over the entire brain volume or limited to the cortical gray matter surface. These dipoles are fixed in location and, generally, orientation, and are homologous to pixels in a digital image. The imaging procedure proceeds to the estimation of the amplitudes of all these elementary currents at once. Hence, contrary to the localization model, there is no intrinsic sense of distinct, active source regions per se. Explicit identification of activity issued from discrete brain regions usually necessitates complementary analysis, such as empirical or inference-driven amplitude thresholding, to discard elementary sources of non-significant contribution according to the statistical appraisal. In that respect, MEG/EEG source images are very similar in essence to the activation maps obtained in fMRI, with the added benefit of time resolution.

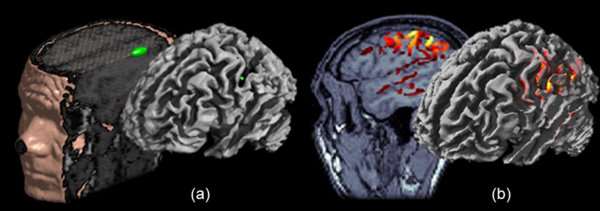

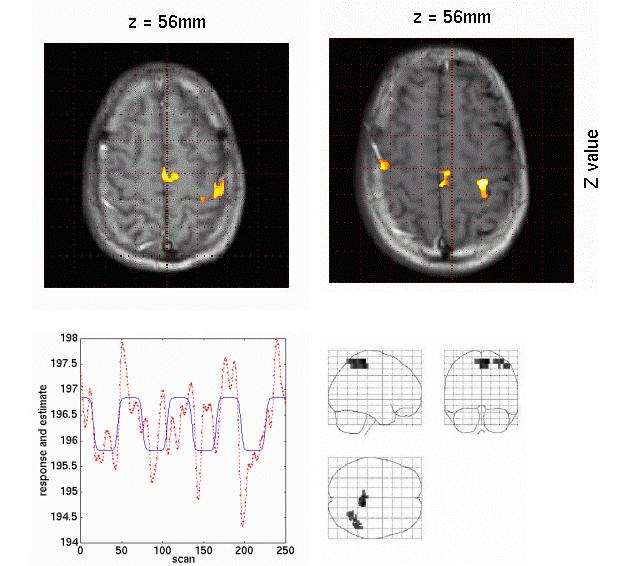

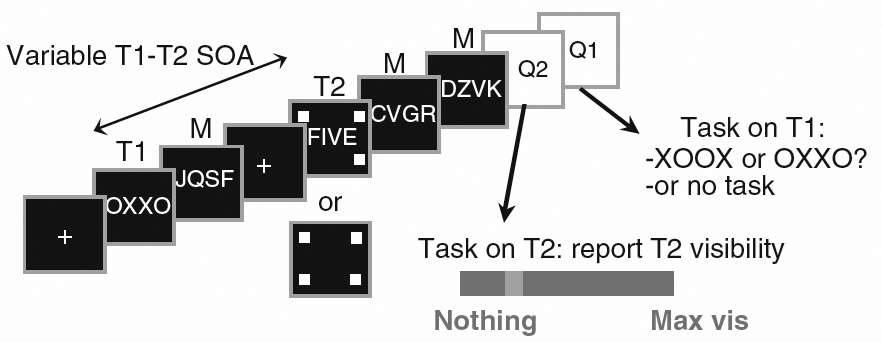

Inverse modeling: the localization (a) vs. imaging (b) approaches. Source modeling through localization consists in decomposing the MEG/EEG generators into a handful of elementary source contributions; the simplest source model in this situation being the equivalent current dipole (ECD). This is illustrated here from experimental data testing the somatotopic organization of primary cortical representations of hand fingers. The parameters of the single ECD have been adjusted on the [20, 40] ms time window following stimulus onset. The ECD was found to localize along the contralateral central sulcus as revealed from the 3D rendering obtained after the source location has been registered to the individual anatomy. In the imaging approach, the source model is spatially-distributed using a large number of ECDs. Here, a surface model of MEG/EEG generators was constrained to the individual brain surface extracted from T1-weighted MR images. Elemental source amplitudes are interpolated onto the cortex, which yields an image-like distribution of the amplitudes of cortical currents.

Early quantitative source localization research in electro- and magnetocardiography had promoted the equivalent current dipole as a generic model of massive electrophysiological activity. Before efficient estimation techniques and software were available, electrophysiologists would empirically solve the MEG/EEG forward and inverse problems to characterize the neural generators responsible for experimental effects detected on the scalp sensors.

This approach is exemplified in (Wood, Cohen, Cuffin, Yarita, & Allison, 1985), where terms such as ‘waveform morphology’ and ‘shape of scalp topography’ are used to discuss the respective sources of MEG and EEG signals. This empirical approach to localization has considerably benefited from the constant increase in the number of sensors of MEG and EEG systems.

Indeed, surface interpolation techniques of sensor data have gained considerable popularity in MEG and EEG research (Perrin, Pernier, Bertrand, Giard, & Echallier, 1987): investigators now can routinely access surface representations of their data on an approximation of the scalp surface – as a disc, a sphere – or on the very head surface extracted from the subject’s MRI. Wood et al. (1985), like many others, used the distance between the minimum and maximum of the dipolar-looking magnetic field topography to infer the putative depth of a dipolar source model of the data.

Computational approaches to source localization attempt to mimic the talent of electrophysiologists, with a more quantitative benefit though. We have seen that the current dipole model has been adopted as the canonical equivalent generator of the electrical activity of a brain region considered as a functional entity. Localizing a current dipole in the head implies that 6 unknown parameters be estimated from the data:

- 3 for location per se,

- 2 for orientation and

- 1 for amplitude.

Therefore, characterizing the source model by a restricted number of parameters was considered as a possible solution to the ill-posed inverse problem and has been attractive to many MEG/EEG scientists. Without additional prior information besides the experimental data, the number of unknowns in the source estimation problem needs to be smaller than that of the instantaneous observations for the inverse problem to be well-posed, in terms of uniqueness of a solution. Therefore, recent high-density systems with about 300 sensors would theoretically allow the unambiguous identification of 50 dipolar sources; a number that would probably satisfy the modeling of brain activity in many neuroscience questions.

It appears however that most research studies using MEG/EEG source localization bear a more conservative profile, using far fewer dipole sources (typically <5). The reasons for this are both technical and specific to MEG/EEG brain signals, as we shall now discuss.

Numerical approaches to the estimation of unknown source parameters are generally based on the widely-used least-squares (LS) technique which attempts to find the set of parameter values that minimize the (square of the) difference between observations and predictions from the model (Fig. 9). Biosignals such as MEG/EEG traces are naturally contaminated by nuisance components (e.g., environmental noise and physiological artifacts), which shall not be explained by the model of brain activity. These components however, contribute to some uncertainty on the estimation of the source model parameters. As a toy example, let us consider noise components that are independent and identically-distributed on all 300 sensors. One would theoretically need to adjust as many additional free parameters in the inverse model as the number of noise components to fully account for all possible experimental (noisy) observations. However, we would end up handling a problem with 300 additional unknowns, adding to the original 300 source parameters, with only 300 data measures available.

Hence, and to avoid confusion between contributions from nuisances and signals of true interest, the MEG/EEG scientist needs to determine the respective parts of interest (the signal) versus perturbation (noise) in the experimental data. The preprocessing steps we have reviewed in the earlier sections of this chapter are therefore essential to identify, attenuate or reject some of the nuisances in the data, prior to proceeding to inverse modeling.

Once the data has been preprocessed, the basic LS approach to source estimation aims at minimizing the deviation of the model predictions from the data: that is, the part of the observations that is left unexplained by the source model.

Let us suppose for the sake of further demonstration that the data is ideally clean from any noisy disturbance, and that we are still willing to fit 50 dipoles to 300 data points. This is in theory an ideal case where there are as many unknowns as there are instantaneous data measures. However, the unknowns in the model do not all share the same type of dependency to the data. In the case of a dipole model, doubling the amplitude of the dipole doubles the amplitude of the sensor data. Dipole source amplitudes are therefore said to be linear parameters of the model. The data however do not depend linearly on dipole locations: the amplitude of the sensor data is altered non-linearly with changes in depth and position of the elementary dipole source. Source orientation is a somewhat hybrid type of parameter. It is considered that small, local displacements of brain activity can be efficiently modeled by a rotating dipole source at some fixed location. Though source orientation is a non-linear parameter in theory, replacing a free-rotating dipole by a triplet of 3 orthogonal dipoles with fixed orientations is a way to express any arbitrary source orientation by a set of 3 – hence linear – amplitude parameters. Non-linear parameters are more difficult to estimate in practice than linear unknowns. The optimal set of source parameters defined from the LS criterion exists and is theoretically unique when sources are constrained to be dipolar (see e.g., Badia, 2004). However in practice, non-linear optimization may be trapped by suboptimal values of the source parameters corresponding to a so-called local minimum of the LS objective. Therefore the practice of multiple dipole fitting is very sensitive to initial conditions (i.e., the values assigned to the unknown parameters to initiate the search) and to the number of sources in the model, which increases the possibility of the optimization procedure being trapped in local, suboptimal LS minima.
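The split between linear and non-linear parameters can be illustrated with a single-dipole least-squares fit. The sketch below is a toy example under stated assumptions: the forward model keeps only the primary current in an infinite homogeneous medium (no head model), the sensors are hypothetical tri-axial magnetometers on a hemisphere, and the non-linear location search is replaced by an exhaustive scan of candidate locations. At each candidate location, the three amplitudes of an orthogonal dipole triplet are obtained by a linear solve. The names `gain` and `fit_dipole` are illustrative.

```python
import numpy as np

MU0_OVER_4PI = 1e-7  # mu0 / (4 pi), in T.m/A


def gain(sensors, r0):
    """Gain matrix (3 * n_sensors, 3) of a dipole triplet at r0.

    Toy forward model (assumption): primary current only, infinite homogeneous
    medium, B = mu0 / (4 pi) * q x (r - r0) / |r - r0|^3, all 3 field components.
    """
    d = sensors - r0
    dist3 = np.linalg.norm(d, axis=1) ** 3
    # Column k holds the field produced by a unit dipole oriented along axis k.
    cols = [np.cross(axis, d) / dist3[:, None] for axis in np.eye(3)]
    return MU0_OVER_4PI * np.stack(cols, axis=-1).reshape(-1, 3)


def fit_dipole(data, sensors, candidates):
    """Scan candidate locations (non-linear part); solve amplitudes linearly."""
    best_cost, best_r0, best_q = np.inf, None, None
    for r0 in candidates:
        G = gain(sensors, r0)
        q, *_ = np.linalg.lstsq(G, data, rcond=None)
        cost = np.sum((data - G @ q) ** 2)  # LS objective at this location
        if cost < best_cost:
            best_cost, best_r0, best_q = cost, r0, q
    return best_r0, best_q, best_cost


rng = np.random.default_rng(0)
sensors = rng.standard_normal((64, 3))
sensors[:, 2] = np.abs(sensors[:, 2])  # keep the upper hemisphere only
sensors *= 0.12 / np.linalg.norm(sensors, axis=1, keepdims=True)

true_r0 = np.array([0.02, -0.02, 0.06])  # simulated source location (m)
true_q = np.array([10e-9, 5e-9, 0.0])    # simulated dipole moment (A.m)
data = gain(sensors, true_r0) @ true_q
data = data + 2e-15 * rng.standard_normal(data.shape)  # additive sensor noise

xs = np.linspace(-0.06, 0.06, 7)
zs = np.linspace(0.02, 0.08, 4)
candidates = np.array([(x, y, z) for x in xs for y in xs for z in zs])

r0_hat, q_hat, cost = fit_dipole(data, sensors, candidates)
```

An exhaustive scan avoids local minima for one dipole on a coarse grid, but the number of location combinations grows quickly with the number of dipoles, which is why iterative non-linear searches, with their sensitivity to initial conditions, are used in practice.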

In summary, even though localizing a number of elementary dipoles corresponding to the amount of instantaneous observations is theoretically well-posed, we are facing two issues that will drive us to reconsider the source-fitting problem in practice:

- The risk of overfitting the data: meaning that the inverse model may account for the noise components in the observations, and

- Non-linear searches that tend to be trapped in local minima of the LS objective.

A general rule of thumb when the data is noisy and the optimization principle is ruled by non-linear dependency is to keep the complexity of the estimation as low as possible. Taming complexity starts with reducing the number of unknowns so that the estimation problem becomes overdetermined. In experimental sciences, overdeterminacy is not as critical as underdeterminacy. From a pragmatic standpoint, supplementary sensors provide additional information and allow the selection of subsets of channels, which may be less contaminated by noise and artifacts.

The early MEG/EEG literature is abundant in studies reporting on single dipole source models. The somatotopy of primary somatosensory brain regions (Okada, Tanenbaum, Williamson, & Kaufman, 1984, Meunier, Lehéricy, Garnero, & Vidailhet, 2003), primary, tonotopic auditory (Zimmerman, Reite, & Zimmerman, 1981) and visual (Lehmann, Darcey, & Skrandies, 1982) responses are examples of such studies where the single dipole model contributed to the better temporal characterization of primary brain responses.

Later components of evoked fields and potentials usually necessitate more elementary sources to be fitted. However, this may be detrimental to the numerical stability and significance of the inverse model. The spatio-temporal dipole model was therefore developed to localize the sources of scalp waveforms that were assumed to be generated by multiple and overlapping brain activations (Scherg & Cramon, 1985). This spatio-temporal model and associated optimization expect that an elementary source is active for a certain duration – with amplitude modulations – while remaining at the same location with the same orientation. This is typical of the introduction of prior information in the MEG/EEG source estimation problem, and this will be further developed in the imaging techniques discussed below.

The number of dipoles to be adjusted is also a model parameter that needs to be estimated. However it leads to difficult, and usually impractical optimization (Waldorp, Huizenga, Nehorai, Grasman, & Molenaar, 2005). Therefore the number of elementary sources in the model is often qualitatively assessed by expert users, which may question the reproducibility of such user-dependent analyses. Hence, special care should be brought to the evaluation of the stability and robustness of the estimated source models. With all that in mind, source localization techniques have proven to be effective, even on complex experimental paradigms (see e.g., Helenius, Parviainen, Paetau, & Salmelin, 2009).

Signal classification and spatial filtering techniques are efficient alternative approaches in that respect. They have gained considerable momentum in the MEG/EEG community in the recent years. They are discussed in the following subsection.

The inherent difficulties of source localization with multiple generators and noisy data have led signal processors to develop alternative approaches, most notably in the glorious field of radar and sonar in the 1970s. Rather than attempting to identify discrete sets of sources by adjusting their non-linear location parameters, scanning techniques have emerged and proceeded by systematically sifting through the brain space to evaluate how a predetermined elementary source model would fit the data at every voxel of the brain volume. For this local model evaluation to be specific to the brain location being scanned, contributions from possible sources located elsewhere in the brain volume need to be blocked. Hence, these techniques are known as spatial filters and beamformers (the simile is a virtual beam being directed and ‘listening’ exclusively to some brain region).

These techniques have triggered tremendous interest and applications in array signal processing and have percolated into the MEG/EEG community on several occasions (e.g., (Spencer, Leahy, Mosher, & Lewis, 1992) and more recently, (Hillebrand, Singh, Holliday, Furlong, & Barnes, 2005)). At each point of the brain grid, a narrow-band spatial filter is formed and evaluates the contribution to data from an elementary source model – such as a single or a triplet of current dipoles – while contributions from other brain regions are ideally muted, or at least attenuated. (Veen & Buckley, 1988) is a technical introduction to beamformers and excellent further reading.

It is sometimes claimed that beamformers do not solve an inverse problem: this is a bit overstated. Indeed, spatial filters do require a source and a forward model that will both be confronted with the observations. Beamformers scan the entire expected source space and systematically test the prediction of the source and forward models with respect to observations. These predictions compose a distributed score map, which should not be misinterpreted as a current density map. More technically – though no details are given here – the forward model needs to be inverted by the beamformer as well. It only proceeds iteratively by sifting through each source grid point and estimating the output of the corresponding spatial filter. Hence beamformers and spatial filters are truly avatars of inverse modeling.
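The scanning procedure can be sketched with one common beamformer variant, the linearly constrained minimum variance (LCMV) filter, which is used here as an assumption since the text does not single out a specific formulation. The forward fields are random vectors standing in for a real head model, the noise is white with known variance, and all names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_sensors, n_grid, n_samples = 32, 50, 5000

# Hypothetical forward fields: one fixed-orientation source per grid point.
leadfields = rng.standard_normal((n_grid, n_sensors))

# Simulate one active grid point with a 10 Hz time course, plus white sensor noise.
true_index = 17
t = np.arange(n_samples) / 1000.0
source = 3.0 * np.sin(2 * np.pi * 10 * t)
noise_std = 1.0
data = (np.outer(leadfields[true_index], source)
        + noise_std * rng.standard_normal((n_sensors, n_samples)))

# Sample covariance of the data and its inverse (the forward model inversion
# happens implicitly through this matrix).
cov = data @ data.T / n_samples
cov_inv = np.linalg.inv(cov)
noise_cov_inv = np.eye(n_sensors) / noise_std**2


def lcmv_weights(l, cov_inv):
    """Spatial filter with unit gain on forward field l and minimum output variance."""
    return cov_inv @ l / (l @ cov_inv @ l)


# Score map: filter output power normalized by the projected noise power.
scores = np.array([(l @ noise_cov_inv @ l) / (l @ cov_inv @ l) for l in leadfields])
peak = int(np.argmax(scores))

w = lcmv_weights(leadfields[peak], cov_inv)
estimated_source = w @ data  # time course seen through the spatial filter
```

The `scores` array is a score map in the sense discussed above: it peaks where the source model explains the data best, and its values are not current amplitudes.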

Beamforming is therefore a convenient method to translate the source localization problem into a signal detection issue. As every method that tackles a complex estimation problem, there are drawbacks to the technique:

- Beamformers depend on the covariance statistics of the noise in the data. These latter may be estimated from the data through sample statistics. However, the number of independent data samples that are necessary for the robust – and numerically stable – estimation of covariance statistics is proportional to the square of the number of data channels, i.e. of sensors. Hence beamformers ideally require long, stationary episodes of data, such as sweeps of ongoing, unaveraged data and experimental conditions where behavioral stationarity ensures some form of statistical stationarity in the data (e.g., ongoing movements). (Cheyne, Bakhtazad, & Gaetz, 2006) have suggested that event-related brain responses can be well captured by beamformers using sample statistics estimated across single trials.

- They are more sensitive to errors in the head model. The filter outputs are typically equivalent to local estimates of SNR. However this latter is not homogeneously distributed everywhere in the brain volume: MEG/EEG signals from activity in deeper brain regions or gyral generators in MEG have weaker SNR than in the rest of the brain. The consequence is side lobe leakages from interfering sources nearby, which impede filter selectivity and therefore, the specificity of source detection (Wax & Anu, 1996).

- Beamformers may be fooled by simultaneous activations occurring in brain regions outside the filter pass-band that are highly correlated with source signals within the pass-band. External sources are interpreted as interferences by the beamformer, which blocks the signals of interest because they bear the same sample statistics as the interference.

Signal processors had long identified these issues and consequently developed multiple signal classification (MUSIC) as an alternative technique (Schmidt, 1986). MUSIC assumes that signal and noise components in the data are uncorrelated. Strong theoretical results in information theory show that these components live in separate, high-dimensional data subspaces, which can be identified using e.g., a PCA of the data time series (Golub, 1996). (J. C. Mosher, Baillet, & Leahy, 1999) is an extensive review of signal classification approaches to MEG and EEG source localization.
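A minimal sketch of the subspace idea follows. It estimates the signal subspace from a singular value decomposition of the data (equivalent to a PCA of the time series) and scores each grid point by how well its forward field fits inside that subspace. The random forward fields, the two simulated sources and the assumption that the signal rank is known in advance are all simplifications made for this illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n_sensors, n_grid, n_samples = 32, 50, 2000

# Hypothetical forward fields, one per grid point (stand-in for a head model).
leadfields = rng.standard_normal((n_grid, n_sensors))

# Two simulated sources with uncorrelated time courses, plus white noise.
active = [5, 30]
t = np.arange(n_samples) / 1000.0
courses = 5.0 * np.vstack([np.sin(2 * np.pi * 7 * t), np.cos(2 * np.pi * 13 * t)])
data = leadfields[active].T @ courses + rng.standard_normal((n_sensors, n_samples))

# Signal subspace: span of the leading left singular vectors of the data.
U, singular_values, _ = np.linalg.svd(data, full_matrices=False)
signal_rank = 2  # assumed known here; choosing it is the delicate step in practice
Us = U[:, :signal_rank]


def subspace_correlation(l, Us):
    """Cosine of the angle between forward field l and the signal subspace."""
    return np.linalg.norm(Us.T @ l) / np.linalg.norm(l)


scores = np.array([subspace_correlation(l, Us) for l in leadfields])
top_two = sorted(np.argsort(scores)[-2:].tolist())
```

Scores close to 1 flag grid points whose forward fields lie almost entirely in the signal subspace. Choosing `signal_rank` too low or too high directly degrades the scan.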

However, the practical aspects of MUSIC and its variations remain limited by their sensitivity to the accurate definition of the respective signal and noise subspaces. These techniques may be fooled by background brain activity, whose signals share similar properties with the event-related responses of interest. An interesting side application of the powerful discrimination ability of MUSIC-like techniques has nevertheless been developed in epilepsy spike-sorting (Ossadtchi et al., 2004).

In summary, spatial-filters, beamformers and signal classification approaches bring us closer to a distributed representation of the brain electrical activity. As a caveat, the results generated by these techniques are not an estimation of the current density everywhere in the brain. They represent a score map of a source model – generally a current dipole – that is evaluated at the points of a predefined spatial lattice, which sometimes leads to misinterpretations. The localization issue now becomes a signal detection problem within the score map (J. Mosher, Baillet, & Leahy, 2003). The imaging approaches we are about to introduce now, push this detection problem further by estimating the brain current density globally.

Source imaging approaches have developed in parallel to the other techniques discussed above. Imaging source models consist of distributions of elementary sources, generally with fixed locations and orientations, whose amplitudes are estimated at once. MEG/EEG source images represent estimations of the global neural current intensity maps, distributed within the entire brain volume or constrained at the cortical surface.

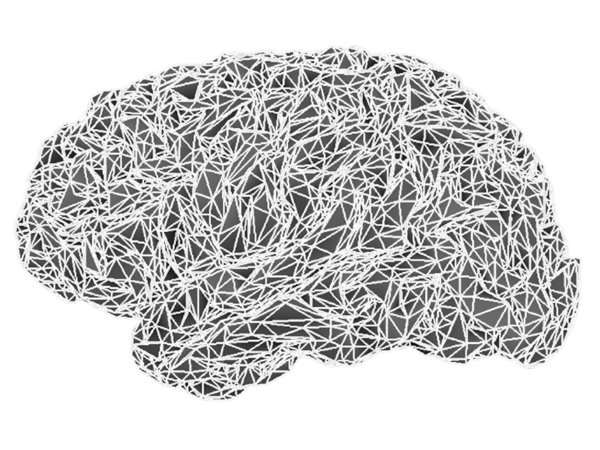

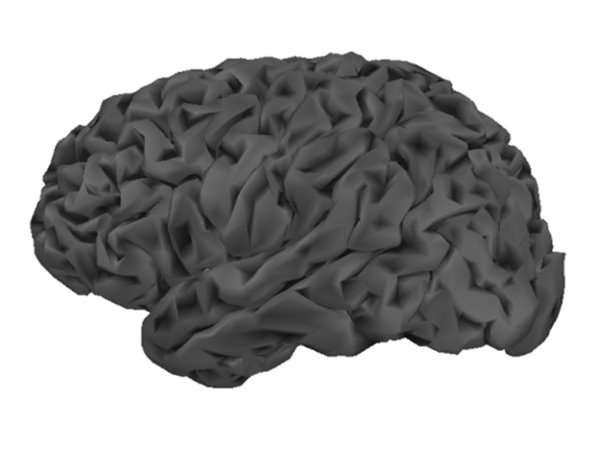

Source image supports consist of either a 3D lattice of voxels or of the nodes of the triangulation of the cortical surface. These latter may be based on a template, or preferably obtained from the subject’s individual MRI and confined to a mask of the grey matter. Multiple academic software packages perform the necessary segmentation and tessellation processes from high-contrast T1-weighted MR image volumes.

The cortical surface, tessellated at two resolutions, using: (top row) 10,034 vertices (20,026 triangles with 10 mm² average surface area) and (bottom row) 79,124 vertices (158,456 triangles with 1.3 mm² average surface area).

As discussed elsewhere in these pages, the cortically-constrained image model derives from the assumption that MEG/EEG data originates essentially from large cortical assemblies of pyramidal cells, with currents generated from post-synaptic potentials flowing orthogonally to the local cortical surface. This orientation constraint can either be strict (Dale & Sereno, 1993) or relaxed by authorizing some controlled deviation from the surface normal (Lin, Belliveau, Dale, & Hamalainen, 2006).

In both cases, reasonable spatial sampling of the image space requires several thousand (typically ~10,000) elementary sources. Consequently, though the imaging inverse problem consists in estimating only linear parameters, it is dramatically underdetermined.

Just like in the context of source localization where e.g., the number of sources is a restrictive prior as a remedy to ill-posedness, imaging models need to be complemented by a priori information. This is properly formulated with the mathematics of regularization as we shall now briefly review.

Adding priors to the imaging model can be adequately formalized in the context of Bayesian inference where solutions to inverse modeling satisfy both the fit to observations – given some probabilistic model of the nuisances – and additional priors. From a parameter estimation perspective, the maximum of the a posteriori probability distribution of source intensity, given the observations could be considered as the ‘best possible model’. This maximum a posteriori (MAP) estimate has been extremely successful in the digital image restoration and reconstruction communities. (Geman & Geman, 1984) is a masterpiece reference of the genre. The MAP is obtained in Bayesian statistics through the optimization of the mixture of the likelihood of the noisy data – i.e., of the predictive power of a given source model – with the a priori probability of a given source model.
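The MAP principle can be written compactly. The notation below is an assumption introduced for illustration only: $B$ denotes the data, $J$ the source amplitudes, $G$ the gain (forward) matrix, and $f(J)$ a generic prior energy.

```latex
\[
\hat{J}_{\mathrm{MAP}}
  = \arg\max_{J}\; p(J \mid B)
  = \arg\max_{J}\; p(B \mid J)\, p(J)
\]
% Special case (assumed here): white Gaussian noise of variance \sigma^2 and a
% prior of the form p(J) \propto \exp\!\left(-f(J) / (2\sigma_J^2)\right).
% Taking -2\sigma^2 \log of the posterior gives a regularized least-squares problem:
\[
\hat{J}_{\mathrm{MAP}}
  = \arg\min_{J}\; \lVert B - G J \rVert^{2} + \lambda\, f(J),
  \qquad \lambda = \frac{\sigma^{2}}{\sigma_J^{2}}
\]
```

In this special case the likelihood contributes the data-fit term and the prior contributes the penalty, with $\lambda$ balancing the two according to the assumed noise and prior variances.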

We do not want to detail the mathematics of Bayesian inference any further here as this would reach outside the objectives of these pages. Specific recommended further reading includes (Demoment, 1989), for a Bayesian discussion on regularization and (Baillet, Mosher, & Leahy, 2001), for an introduction to MEG/EEG imaging methods, also in the Bayesian framework.

From a practical standpoint, the priors on the source image models may take many forms: promote current distributions with high spatial and temporal smoothness, penalize models with currents of unrealistic, physiologically implausible amplitudes, favor agreement with fMRI activation maps, or prefer source image models made of piecewise homogeneous active regions, etc. An appealing benefit of well-chosen priors is that they may ensure the uniqueness of the optimal solution to the imaging inverse problem, despite its original underdeterminacy.

Because relevant priors for MEG/EEG imaging models are plentiful, it is important to understand that the associated source estimation methods mostly share the same technical background. Also, the selection of image priors can be seen as being as arbitrary and subjective as the selection of dipoles in the source localization techniques we have reviewed previously. Comprehensive solutions for this model selection issue are now emerging and will be briefly reviewed further below.

The free parameters of the imaging model are the amplitudes of the elementary source currents distributed on the brain’s geometry. The non-linear parameters (e.g., the elementary source locations) now become fixed priors as provided by anatomical information. The model estimation procedure and the very existence of a unique solution strongly depend on the mathematical nature of the image prior.

A widely-used prior in the field of image reconstruction considers that the expected source amplitudes be as small as possible on average. This is the well-described minimum-norm (MN) model. Technically speaking, we are referring to the L2-norm; the objective cost function ruling the model estimation is quadratic in the source amplitudes, with a unique analytical solution (Tarantola, 2004). The computational simplicity and uniqueness of the MN model made it very attractive in MEG/EEG early on (Wang et al., 1992).
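The closed-form character of the MN estimate can be illustrated with a minimal NumPy sketch. It assumes a Tikhonov-regularized L2 formulation; the function name, the regularization parameter `lam`, and the random toy gain matrix are all hypothetical and serve only to show the algebra, not to reproduce any particular software package.

```python
import numpy as np

def minimum_norm_estimate(gain, data, lam):
    """Regularized L2 minimum-norm estimate (illustrative sketch).

    gain : (n_sensors, n_sources) forward (gain) matrix
    data : (n_sensors,) or (n_sensors, n_times) sensor measurements
    lam  : positive regularization parameter (hypothetical name)
    """
    n_sensors = gain.shape[0]
    gram = gain @ gain.T + lam * np.eye(n_sensors)
    # Solve the small sensor-space system rather than inverting explicitly
    return gain.T @ np.linalg.solve(gram, data)

# Toy problem: 20 sensors, 500 sources, one active source (random gain, assumed)
rng = np.random.default_rng(0)
gain = rng.standard_normal((20, 500))
true_sources = np.zeros(500)
true_sources[42] = 1.0
data = gain @ true_sources

estimate = minimum_norm_estimate(gain, data, lam=1e-6)
residual = np.linalg.norm(gain @ estimate - data)
```

The estimate reproduces the data almost exactly while having a smaller L2 norm than the true single-source configuration: among all source patterns that explain the data, the MN prior selects the one with the least energy, which is generally spread over many sources.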

The basic MN estimate is problematic though as it tends to favor the most superficial brain regions (e.g., the gyral crowns) and underestimate contributions from deeper source areas (such as sulcal fundi) (Fuchs, Wagner, Köhler, & Wischmann, 1999).

As a remedy, a slight alteration of the basic MN estimator consists in weighting each elementary source amplitude by the inverse of the norm of its contribution to sensors. Such depth weighting yields a weighted MN (WMN) estimate, which, like the basic MN, still benefits from uniqueness and linearity in the observations (Lin, Witzel, et al., 2006).
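Depth weighting can be sketched in the same way. The version below assumes that each source receives a prior variance inversely proportional to the squared norm of its gain column raised to a power; the `exponent` parameter and the toy gain matrix, in which half of the sources are artificially attenuated to mimic depth, are assumptions made for illustration.

```python
import numpy as np

def weighted_minimum_norm(gain, data, lam, exponent=1.0):
    """Depth-weighted minimum-norm estimate (illustrative sketch).

    exponent = 0 gives the basic MN estimate; exponent = 1 fully
    normalizes each source by the norm of its gain column.
    """
    col_norms = np.linalg.norm(gain, axis=0)
    source_var = 1.0 / col_norms ** (2.0 * exponent)   # diagonal of R
    weighted_gain_t = source_var[:, None] * gain.T      # R @ G.T
    gram = gain @ weighted_gain_t + lam * np.eye(gain.shape[0])
    return weighted_gain_t @ np.linalg.solve(gram, data)

rng = np.random.default_rng(0)
gain = rng.standard_normal((20, 300))
gain[:, 150:] *= 0.1        # mimic deeper sources: weaker sensor contributions
data = gain[:, 200]         # field produced by a single "deep" source

plain = weighted_minimum_norm(gain, data, lam=1e-6, exponent=0.0)
weighted = weighted_minimum_norm(gain, data, lam=1e-6, exponent=1.0)

# Fraction of the total estimated amplitude assigned to the deep sources
deep_share_plain = np.abs(plain[150:]).sum() / np.abs(plain).sum()
deep_share_weighted = np.abs(weighted[150:]).sum() / np.abs(weighted).sum()
```

In this toy setting the basic MN estimate attributes most of the amplitude to the strong-gain (superficial) sources, whereas the weighted estimate shifts it toward the weak-gain (deep) ones. Full normalization may overcompensate, which is one reason to treat the exponent as a tunable quantity.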

Despite their robustness to noise and simple computation, it is relevant to question the neurophysiological validity of MN priors. Indeed – though reasonably intuitive – there is no evidence that neural currents would systematically match the principle of minimal energy. Some authors have speculated that a more physiologically relevant prior would be that the norm of spatial derivatives (e.g., surface or volume gradient or Laplacian) of the current map be minimized (see the LORETA method in (Pascual-Marqui, Michel, & Lehmann, 1994)). As a general rule of thumb, all MN-based source imaging approaches overestimate the smoothness of the spatial distribution of neural currents. Quantitative and qualitative empirical evidence nevertheless demonstrates spatial discrimination of reasonable range at the sub-lobar brain scale (Darvas, Pantazis, Kucukaltun-Yildirim, & Leahy, 2004, Sergent et al., 2005).

Most of the recent literature on regularized imaging models for MEG/EEG strives to improve the spatial resolution of the MN-based models (see (Baillet, Mosher, & Leahy, 2001) for a review) or to reduce the degree of arbitrariness involved in selecting a generic source model a priori (Mattout, Phillips, Penny, Rugg, & Friston, 2006, Stephan, Penny, Daunizeau, Moran, & Friston, 2009). This results in notable improvements in theoretical performance, though with higher computational demands and practical optimization issues.

As a general principle, we face the dilemma of knowing that all priors about the source images are certainly abusive, hence that the inverse model is approximate, while hoping it is not too approximate. This discussion is recurrent in the general context of estimation theory and model selection, as we shall discuss in the next section.

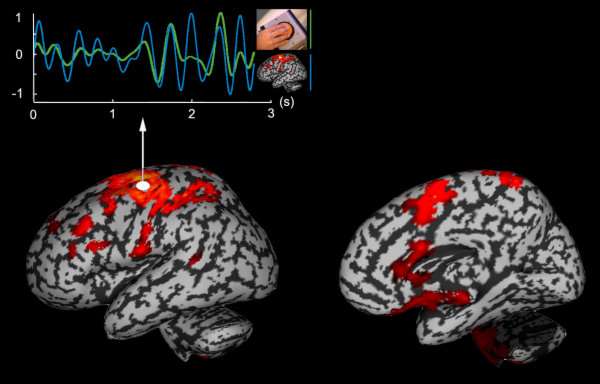

![Distributed source imaging of the [120,300] ms time interval following the presentation of the target face object](/-/media/MCW/Departments/Magnetoencephalography-Program/figure13.jpg)

Distributed source imaging of the [120,300] ms time interval following the presentation of the target face object in the visual RSVP oddball paradigm described before. The images show a slightly smoothed version of one participant’s cortical surface. Colors encode the contrast of MEG source amplitudes between responses to target versus control faces. Visual responses are detected by 120 ms and rapidly propagate anteriorly. From 250 ms onwards, strong anterior mesial responses are detected in the cingulate cortex. These latter are the main contributors to the brain response to target detection.

Appraisal of MEG/EEG Source Models

Questions like: ‘How different is the dipole location between these two experimental conditions?’ and ‘Are source amplitudes larger in such condition than in a control condition?’ belong to statistical inference from experimental data. The basic problem of interest here is hypothesis testing, which is supposed to potentially invalidate a model under investigation. Here, the model must be understood at a higher hierarchical level than when talking about e.g., an MEG/EEG source model. It is supposed to address the neuroscience question that has motivated data acquisition and the experimental design (Guilford & Fruchter, 1978).

In the context of MEG/EEG, the population samples that will support the inference are either trials or subjects, for hypothesis testing at the individual and group levels, respectively.

As in the case of the estimation of confidence intervals, both parametric and non-parametric approaches to statistical inference can be considered. There is no space here for a comprehensive review of tools based on parametric models. They have been and still are extensively studied in the fMRI and PET communities – and recently adapted to EEG and MEG (Kiebel, Tallon-Baudry, & Friston, 2005) – and popularized with software toolboxes such as SPM (K. Friston, Ashburner, Kiebel, Nichols, & Penny, 2007).

Non-parametric approaches such as permutation tests have emerged for statistical inference applied to neuroimaging data (Nichols & Holmes, 2002, Pantazis, Nichols, Baillet, & Leahy, 2005). Rather than applying transformations to the data to secure the assumption of normally-distributed measures, non-parametric statistical tests take the data as they are and are robust to departures from normal distributions.

In brief, hypothesis testing forms an assumption about the data that the researcher is interested in questioning. This basic hypothesis is called the null hypothesis, H0, and is traditionally formulated to translate no significant finding in the data e.g., ‘There are no differences in the MEG/EEG source model between two experimental conditions’. The statistical test evaluates the probability that the statistic in question would be obtained just by chance if this hypothesis were true. In other words, the data from both conditions are interchangeable under the H0 hypothesis. This is literally what permutation testing does. It computes the sample distribution of estimated parameters under the null hypothesis and verifies whether a statistic of the original parameter estimates was likely to be generated under this law.
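The interchangeability argument translates almost directly into code. The sketch below is a minimal two-sample permutation test on a single scalar measure (for instance, the amplitude of one source across trials); the simulated data, the choice of the difference of means as the statistic, and all names are illustrative assumptions.

```python
import numpy as np

def permutation_test(cond_a, cond_b, n_permutations=5000, seed=0):
    """Two-sided permutation test on the difference of means."""
    rng = np.random.default_rng(seed)
    observed = cond_a.mean() - cond_b.mean()
    pooled = np.concatenate([cond_a, cond_b])
    n_a = cond_a.size
    count = 0
    for _ in range(n_permutations):
        # Under H0 the condition labels are exchangeable: shuffle them
        shuffled = rng.permutation(pooled)
        diff = shuffled[:n_a].mean() - shuffled[n_a:].mean()
        if abs(diff) >= abs(observed):
            count += 1
    # The +1 terms count the observed labeling as one of the permutations
    p_value = (count + 1) / (n_permutations + 1)
    return observed, p_value

# Simulated single-trial amplitudes (assumed): 60 trials per condition
rng = np.random.default_rng(1)
target = rng.normal(loc=1.0, scale=1.0, size=60)
control = rng.normal(loc=0.0, scale=1.0, size=60)
observed, p_value = permutation_test(target, control)
```

No assumption of normality is needed: the null distribution is built from the data themselves, at the cost of recomputing the statistic for every permutation.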

We shall now briefly review the principles of multiple hypotheses testing from the same sample of measurements, which induces errors when multiple parameters are being tested at once. This issue pertains to statistical inference both at the individual and group levels. Samples therefore consist of repetitions (trials) of the same experiment in the same subject, or of the results from the same experiment within a set of subjects, respectively. This distinction is not crucial at this point. We shall however point at the issue of spatial normalization of the brain across subjects, either by applying normalization procedures (Ashburner & Friston, 1997) or by the definition of a generic coordinate system onto the cortical surface (Fischl, Sereno, & Dale, 1999, Mangin et al., 2004).

The outcome of a test evaluates the probability p that the statistic computed from the data samples arises from chance alone, as expressed by the null hypothesis. The investigator needs to fix a threshold on p a priori, above which H0 cannot be rejected. Tests are designed to be computed once from the data sample, so that the error – called the type I error – which consists in rejecting H0 while it is valid, stays below the predefined threshold.

If the same data sample is used several times for several tests, we multiply the chances of committing a type I error. This is particularly critical when running tests on sensor or source amplitudes of an imaging model, as the number of tests is on the order of 100 and even 10,000, respectively. In this latter case, a 5% error rate over 10,000 tests is expected to generate about 500 false positives, each wrongly rejecting H0. This is obviously not desirable, which is why the so-called family-wise error rate (FWER) should be kept under control.
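The arithmetic behind these figures is short enough to check directly. The sketch below computes the expected number of false positives and, under the simplifying assumption that the tests are independent (which is not true of neighboring sensors or sources), the probability of at least one false positive. Function names are illustrative.

```python
def expected_false_positives(alpha, n_tests):
    """Expected number of false rejections when H0 holds for every test."""
    return alpha * n_tests

def family_wise_error_rate(alpha, n_tests):
    """P(at least one false rejection), assuming independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests

alpha = 0.05
for n_tests in (1, 100, 10_000):   # one test, sensor level, source level
    print(
        n_tests,
        expected_false_positives(alpha, n_tests),
        family_wise_error_rate(alpha, n_tests),
    )
```

Even at the sensor level (about 100 tests), at least one false positive is almost certain when each test is thresholded at 5% without correction.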

Parametric approaches to address this issue have been elaborated using the theory of random fields and have gained tremendous popularity through the SPM software (K. Friston et al., 2007). These techniques have been extended to electromagnetic source imaging but are less robust to departures from normality than non-parametric solutions. The FWER in non-parametric testing can be controlled by using e.g., the statistic of the maximum over the entire source image or topography at the sensor level (Pantazis et al., 2005).
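A minimal sketch of the maximum-statistic idea follows. For every permutation of the condition labels, only the largest absolute difference across all sources is retained; the (1 − alpha) quantile of these maxima then serves as a single threshold for the whole image. The simulated data, the use of a plain difference of means rather than a t statistic, and all names are simplifying assumptions.

```python
import numpy as np

def max_statistic_threshold(cond_a, cond_b, alpha=0.05,
                            n_permutations=1000, seed=0):
    """Threshold controlling the FWER via the permutation max statistic.

    cond_a, cond_b : (n_trials, n_sources) arrays of source amplitudes
    """
    rng = np.random.default_rng(seed)
    pooled = np.vstack([cond_a, cond_b])
    n_a = cond_a.shape[0]
    max_stats = np.empty(n_permutations)
    for i in range(n_permutations):
        order = rng.permutation(pooled.shape[0])   # shuffle trial labels
        perm_a = pooled[order[:n_a]]
        perm_b = pooled[order[n_a:]]
        diff = perm_a.mean(axis=0) - perm_b.mean(axis=0)
        max_stats[i] = np.abs(diff).max()          # maximum over all sources
    return np.quantile(max_stats, 1.0 - alpha)

# Simulated data (assumed): 40 trials, 200 sources, effect in the first 5 only
rng = np.random.default_rng(2)
n_trials, n_sources = 40, 200
control = rng.standard_normal((n_trials, n_sources))
target = rng.standard_normal((n_trials, n_sources))
target[:, :5] += 2.0

threshold = max_statistic_threshold(target, control)
observed = np.abs(target.mean(axis=0) - control.mean(axis=0))
significant = np.flatnonzero(observed > threshold)
```

Because the threshold is derived from the distribution of the maximum, the chance that any null source exceeds it is held near alpha, whatever the number of sources tested.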

The emergence of statistical inference solutions adapted to MEG/EEG has brought electromagnetic source localization and imaging to a considerable degree of maturity that is quite comparable to other neuroimaging techniques. Most software solutions now integrate sound solutions to statistical inference for MEG and EEG data, and this is a field that is still growing rapidly.

MEG functional connectivity and statistical inference at the group level illustrated: Jerbi et al. (2007) revealed a cortical functional network involved in hand movement coordination at low frequency (4 Hz). The statistical group inference first consisted in fitting, for each trial in the experiment, a distributed source model constrained to the individual anatomy of each of the 14 subjects involved. The brain area with maximum coherent activation with instantaneous hand speed was identified within the contralateral sensorimotor area (white dot). The traces at the top illustrate excellent coherence in the [3,5] Hz range between these measurements (hand speed in green and M1 motor activity in blue). Secondly, the search for brain areas with activity in significant coherence with M1 revealed a larger distributed network of regions. All subjects were coregistered to a brain surface template in Talairach normalized space with the corresponding activations interpolated onto the template surface. A non-parametric t-test contrast was completed using permutations between rest and task conditions (p<0.01).

We have discussed how fitting dipoles to a data time segment may be quite sensitive to initial conditions and therefore, subjective. Similarly, imaging source models suggest that each brain location is potentially active. It is therefore important to evaluate the confidence one may place in a given model. In other words, we are now looking for error bars that would define a confidence interval about the estimated values of a source model.

Signal processors have developed a principled approach to what they have termed ‘detection and estimation theories’ (Kay, 1993). The main objective consists in understanding how certain one can be about the estimated parameters of a model, given a model for the noise in the data. The basic approach consists in treating the estimated parameters (e.g., source locations) as random variables. Parametric estimation of error bounds on the source parameters consists in estimating their bias and variance.

Bias is the distance between the true value and the expectation of the estimated parameter values under perturbations. Variance measures the spread of the estimates about that expectation. Cramer-Rao lower bounds (CRLB) on the estimator’s variance can be explicitly computed using an analytical solution to the forward model and given a model for perturbations (e.g., distributed under a normal law). In a nutshell, the tighter the CRLB, the more confident one can be about the estimated values. (J. C. Mosher, Spencer, Leahy, & Lewis, 1993) have investigated this approach using extensive Monte-Carlo simulations, which evidenced a resolution of a few millimeters for single dipole models. These results were later confirmed by phantom studies (Leahy, Mosher, Spencer, Huang, & Lewine, 1998, Baillet, Riera, et al., 2001). CRLB increased markedly for two-dipole models, thereby demonstrating their extreme sensitivity and instability.
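For reference, the bound can be stated compactly. The notation is an assumption introduced here: θ is the vector of source parameters, m(θ) the noiseless sensor data predicted by the forward model, and the perturbations are taken to be white Gaussian noise with variance σ². The bound applies to unbiased estimators.

```latex
\operatorname{cov}\big(\hat{\boldsymbol{\theta}}\big)
  \succeq \mathcal{I}(\boldsymbol{\theta})^{-1},
\qquad
\mathcal{I}(\boldsymbol{\theta})
  = \frac{1}{\sigma^{2}}
    \left( \frac{\partial \mathbf{m}}{\partial \boldsymbol{\theta}} \right)^{\!\top}
    \left( \frac{\partial \mathbf{m}}{\partial \boldsymbol{\theta}} \right)
```

The Fisher information matrix depends on how strongly the predicted data change with each parameter: parameters to which the sensors are weakly sensitive, or whose effects on the data are nearly collinear (as with two nearby dipoles), yield large bounds.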

Recently, non-parametric approaches to the determination of error bounds have greatly benefited from the considerable increase in computational power. Jackknife and bootstrap techniques have proved to be efficient and powerful tools to estimate confidence intervals on MEG/EEG source parameters, regardless of the nature of perturbations and of the source model.
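A percentile bootstrap can be sketched in a few lines. Trials are resampled with replacement, the parameter of interest is re-estimated on each resample, and the confidence interval is read from the quantiles of the resulting estimates. Here the "source parameter" is simply the mean of simulated single-trial amplitudes; in practice `estimator` would wrap a full source estimation, and all names and data are illustrative assumptions.

```python
import numpy as np

def bootstrap_ci(trials, estimator, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for a scalar estimator."""
    rng = np.random.default_rng(seed)
    n_trials = trials.shape[0]
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        # Draw n_trials trial indices with replacement
        idx = rng.integers(0, n_trials, size=n_trials)
        estimates[i] = estimator(trials[idx])
    tail = (1.0 - level) / 2.0
    return np.quantile(estimates, tail), np.quantile(estimates, 1.0 - tail)

# Simulated single-trial peak amplitudes (assumed, arbitrary units)
rng = np.random.default_rng(3)
trials = 5.0 + rng.standard_normal(100)
low, high = bootstrap_ci(trials, np.mean)
```

The procedure makes no assumption about the distribution of the perturbations, which is why it applies regardless of the noise and source models; its cost is one re-estimation per resample.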